Table of Links

Abstract and 1 Introduction

Related Work

2.1. Multimodal Learning

2.2. Multiple Instance Learning

Methodology

3.1. Preliminaries and Notations

3.2. Relations between Attention-based VPG and MIL

3.3. MIVPG for Multiple Visual Inputs

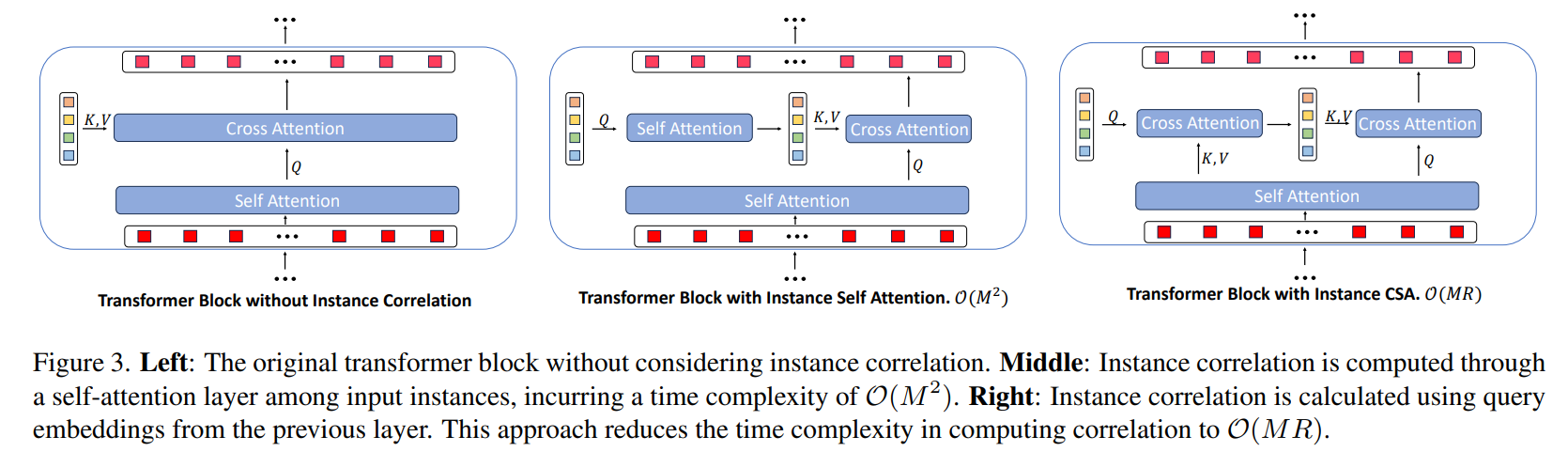

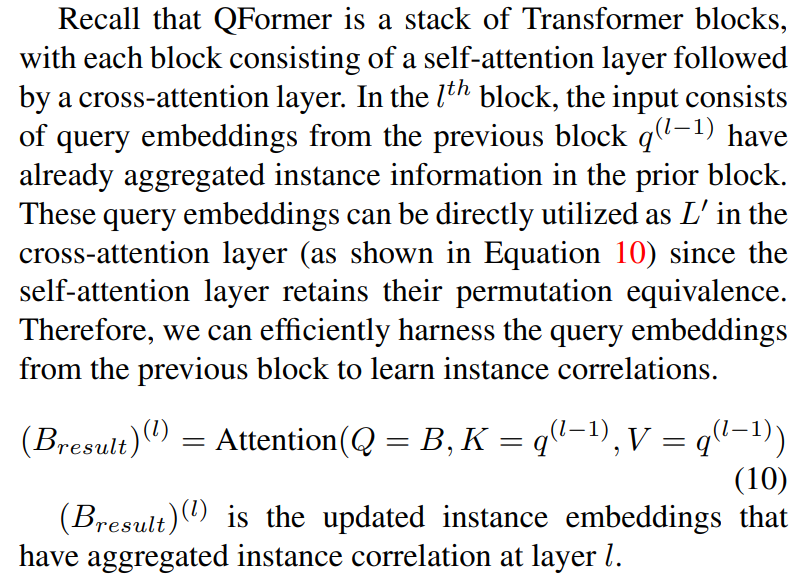

3.4. Unveiling Instance Correlation in MIVPG for Enhanced Multi-instance Scenarios

Experiments and 4.1. General Setup

4.2. Scenario 1: Samples with Single Image

4.3. Scenario 2: Samples with Multiple Images, with Each Image as a General Embedding

4.4. Scenario 3: Samples with Multiple Images, with Each Image Having Multiple Patches to be Considered and 4.5. Case Study

Conclusion and References

Supplementary Material

A. Detailed Architecture of QFormer

B. Proof of Proposition

C. More Experiments

3.4. Unveiling Instance Correlation in MIVPG for Enhanced Multi-instance Scenarios

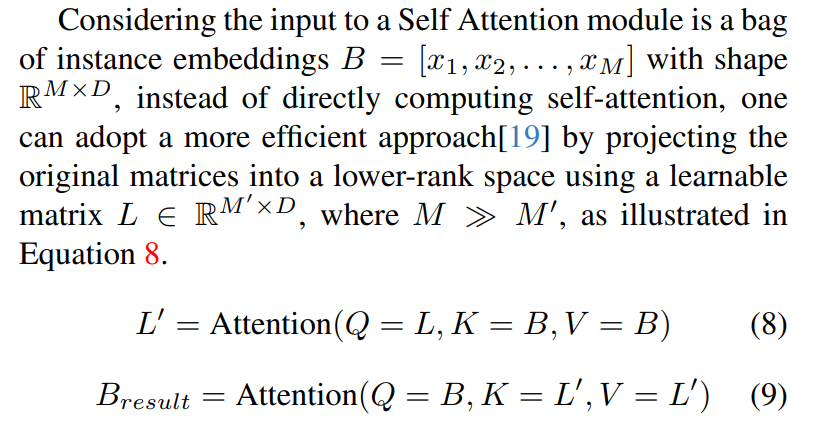

Subsequently, the aggregated low-rank matrix can be reintegrated with the original embeddings, as shown in Equation 9. This low-rank projection reduces the time complexity to O(MM′), compared with O(M²) for full self-attention over the M instances.
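The complexity claim can be illustrated with a minimal sketch. Since the section's equations are not reproduced in text form, the code does not transcribe them. Instead it assumes an induced-attention design in the spirit of the Set Transformer: M′ learnable vectors (the hypothetical `inducing` matrix) attend over the M instance embeddings to form the aggregated low-rank matrix, each instance then attends back to that matrix, and the result is added to the original embeddings. Learned query, key, and value projections are omitted for brevity. Both attention steps score M × M′ pairs, which is where the O(MM′) cost comes from.

```python
import math
import random

Matrix = list[list[float]]


def matmul_t(a: Matrix, b: Matrix) -> Matrix:
    """Return a @ b.T for row-major nested lists."""
    return [[sum(x * y for x, y in zip(row_a, row_b)) for row_b in b] for row_a in a]


def softmax_rows(scores: Matrix) -> Matrix:
    """Numerically stable row-wise softmax."""
    out = []
    for row in scores:
        m = max(row)
        exps = [math.exp(s - m) for s in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def attend(queries: Matrix, keys_values: Matrix) -> tuple[Matrix, int]:
    """Scaled dot-product attention with keys == values (no learned projections).

    Also returns how many query-key scores were computed.
    """
    d = len(queries[0])
    scores = [[s / math.sqrt(d) for s in row] for row in matmul_t(queries, keys_values)]
    weights = softmax_rows(scores)
    # Each output row is a softmax-weighted sum of the value rows.
    out = [
        [sum(w * v[j] for w, v in zip(w_row, keys_values)) for j in range(d)]
        for w_row in weights
    ]
    return out, len(queries) * len(keys_values)


def low_rank_csa(x: Matrix, inducing: Matrix) -> tuple[Matrix, int]:
    """Hypothetical low-rank correlation step (assumed design, not the paper's equations)."""
    # Step 1: M' inducing vectors aggregate the M instances into an M' x D matrix.
    low_rank, cost_aggregate = attend(inducing, x)
    # Step 2: every instance reads back from the low-rank matrix (M x M' scores).
    update, cost_readback = attend(x, low_rank)
    # Step 3: reintegrate with the original embeddings through a residual sum.
    out = [[a + b for a, b in zip(row_x, row_u)] for row_x, row_u in zip(x, update)]
    return out, cost_aggregate + cost_readback


random.seed(0)
M, M_prime, D = 64, 4, 8
x = [[random.gauss(0, 1) for _ in range(D)] for _ in range(M)]
inducing = [[random.gauss(0, 1) for _ in range(D)] for _ in range(M_prime)]

out, cost = low_rank_csa(x, inducing)
print(len(out), len(out[0]), cost)  # 64 8 512
print(M * M)  # 4096 scores for full self-attention over the same instances
```

With M = 64 and M′ = 4 the sketch computes 512 attention scores instead of 4096; with M′ held fixed, the gap widens linearly as M grows.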

Proposition 2. MIVPG, when equipped with the CSA (Correlated Self-Attention) module, continues to fulfill the essential properties of MIL.

We prove Proposition 2 in Supplementary Material B.
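Proposition 2 can also be checked numerically on a toy bag. The sketch below assumes that the MIL property at stake is that the bag-level output does not depend on the order of the instances. It uses a hypothetical stand-in for CSA (`correlate`: M′ learnable vectors aggregate the instances, the instances read back from them, and the result is added residually), followed by pooling with learnable queries, the kind of query-based cross-attention QFormer performs. It tests a single random bag, so it illustrates the property rather than replacing the proof in Supplementary Material B.

```python
import math
import random


def attend(queries, keys_values):
    """Scaled dot-product attention with keys == values (no learned projections)."""
    d = len(queries[0])
    out = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys_values]
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        out.append([sum(e * v[j] for e, v in zip(exps, keys_values)) / total for j in range(d)])
    return out


def correlate(x, inducing):
    """Hypothetical low-rank correlation step: aggregate, read back, add residually."""
    low_rank = attend(inducing, x)   # invariant to instance order
    update = attend(x, low_rank)     # one row per instance, so it follows the instance order
    return [[a + b for a, b in zip(r, u)] for r, u in zip(x, update)]


def pooled_output(x, inducing, queries):
    """Correlate the instances, then pool them into one row per learnable query."""
    return attend(queries, correlate(x, inducing))


random.seed(1)
M, M_prime, N_q, D = 10, 3, 2, 4
x = [[random.gauss(0, 1) for _ in range(D)] for _ in range(M)]
inducing = [[random.gauss(0, 1) for _ in range(D)] for _ in range(M_prime)]
queries = [[random.gauss(0, 1) for _ in range(D)] for _ in range(N_q)]

# Shuffle the instances of the bag.
perm = list(range(M))
random.shuffle(perm)
x_shuffled = [x[i] for i in perm]

pooled = pooled_output(x, inducing, queries)
pooled_shuffled = pooled_output(x_shuffled, inducing, queries)
max_diff = max(
    abs(a - b) for row_a, row_b in zip(pooled, pooled_shuffled) for a, b in zip(row_a, row_b)
)
print(max_diff < 1e-9)  # True
```

The correlation step is permutation-equivariant (shuffling the instances shuffles its output rows in the same way), and the query-based pooling sums over instances, so their composition is permutation-invariant up to floating-point rounding.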

In summary, as depicted in Figure 2a, we establish that QFormer belongs to the MIL category and is a special case of our proposed MIVPG, which extends it to visual inputs with multiple dimensions while accounting for instance correlation.

:::info

Authors:

(1) Wenliang Zhong, The University of Texas at Arlington;

(2) Wenyi Wu, Amazon;

(3) Qi Li, Amazon;

(4) Rob Barton, Amazon;

(5) Boxin Du, Amazon;

(6) Shioulin Sam, Amazon;

(7) Karim Bouyarmane, Amazon;

(8) Ismail Tutar, Amazon;

(9) Junzhou Huang, The University of Texas at Arlington.

:::

:::info

This paper is available on arXiv under the CC BY 4.0 DEED (Attribution 4.0 International) license.

:::