Netflix replaced a CQRS implementation using Kafka and Cassandra with a new solution leveraging RAW Hollow, an in-memory object store developed internally. The revamped architecture of Tudum offers much faster content previews during the editorial process and faster page rendering for visitors.

Netflix launched Tudum, its official fan website, in late 2021, to provide a destination for Netflix users interested in additional content associated with the company’s shows. The architecture of the website was initially based on the Command Query Responsibility Segregation (CQRS) pattern to optimize read performance for serving content.

The write part of the platform was built around a third-party CMS product and had a dedicated ingestion service for handling content update events delivered via a webhook. The ingestion service was responsible for converting CMS data into read-optimized page content by applying templates, as well as data validations and transformations. The read-optimized data would then be published to a dedicated Kafka topic.

Initial Tudum data architecture (Source: Netflix Engineering Blog)

On the query side, the page data service was responsible for consuming messages from the Kafka topic and storing the data in the Cassandra query database. The service utilized a near cache to improve performance, providing stored page data to the page construction service and other internal services.
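The write and query paths described above can be illustrated with a minimal sketch. It is not Netflix's code: the `topic` deque stands in for the Kafka topic, the `query_store` dictionary stands in for the Cassandra query database, and the function names, event fields, and HTML template are all hypothetical.

```python
from collections import deque

# Hypothetical stand-ins: a deque for the Kafka topic and a dict for
# the Cassandra query database. Neither uses a real client library.
topic = deque()
query_store = {}


def ingest(cms_event: dict) -> None:
    """Write side: validate a CMS event, apply a template, publish the page."""
    if not cms_event.get("id") or not cms_event.get("title"):
        raise ValueError("CMS event needs an id and a title")
    # Transformation plus a trivial template, assumed for illustration only.
    page = {
        "id": cms_event["id"],
        "html": f"<h1>{cms_event['title'].strip()}</h1>",
    }
    topic.append(page)


def consume_all() -> int:
    """Query side: drain the topic and store read-optimized pages."""
    count = 0
    while topic:
        page = topic.popleft()
        query_store[page["id"]] = page
        count += 1
    return count
```

In this sketch a page becomes readable only after `consume_all()` runs, which mirrors how the read path trails the write path in a CQRS design.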

The initial architecture benefited from the decoupling of read and write paths, allowing for independent scaling. However, due to the caching refresh cycle, CMS updates were taking many seconds to show up on the website. The issue made it problematic for content editors to preview their modifications and got progressively worse as the amount of content grew, resulting in delays lasting tens of seconds.

Eugene Yemelyanau, technology evangelist, and Jake Grice, staff engineer at Netflix, describe how caching caused delays in displaying updated content:

Regardless of which system modifies the data, the cache is updated with each refresh cycle. If you have 60 keys and a refresh interval of 60 seconds, the near cache will update one key per second. This was problematic for previewing recent modifications, as these changes were only reflected with each cache refresh. As Tudum’s content grew, cache refresh times increased, further extending the delay.
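The arithmetic in the quote can be reproduced with a small model. It assumes, purely for illustration, that keys are refreshed one after another at a fixed cost per key, so the full cycle grows linearly with the number of keys; the function names and the one-second default are hypothetical.

```python
def refresh_cycle_seconds(num_keys: int, seconds_per_key: float = 1.0) -> float:
    """Length of one full refresh cycle under the fixed-cost-per-key assumption."""
    return num_keys * seconds_per_key


def visibility_delay(update_time: float, key_index: int, num_keys: int,
                     seconds_per_key: float = 1.0) -> float:
    """Seconds until an update made at `update_time` is picked up by the cache.

    Key `i` is assumed to be refreshed at i * seconds_per_key, and then once
    every full cycle after that.
    """
    cycle = refresh_cycle_seconds(num_keys, seconds_per_key)
    slot = key_index * seconds_per_key
    # Distance from the update to the next refresh slot for this key.
    return (slot - update_time) % cycle
```

With 60 keys, an update that lands just after its key was refreshed waits almost the full 60-second cycle. With 600 keys under the same assumptions, the worst case grows to roughly 600 seconds, which matches the observation that delays increased as content grew.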

Eventually, the team decided to revamp the architecture to reduce, and ideally eliminate, the delays in previewing content updates. Engineers chose to leverage RAW Hollow, a homegrown in-memory object database. Netflix designed the database to handle small to medium datasets and support strong read-after-write consistency. RAW Hollow allows the entire dataset to reside in the memory of each application process within a cluster, offering low latency and high availability. The database provides eventual consistency by default but supports strong consistency at the individual request level.
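The difference between eventually consistent reads by default and strong consistency requested per read can be shown with a toy model. This is not the RAW Hollow API: `WriteLog`, `Replica`, and the `strong` flag are hypothetical names, and the real system's replication mechanism is not described here.

```python
class WriteLog:
    """Hypothetical ordered log of writes shared by all replicas."""

    def __init__(self):
        self.entries = []  # list of (key, value) pairs in write order

    def append(self, key, value) -> int:
        self.entries.append((key, value))
        return len(self.entries)  # position after this write


class Replica:
    """Holds the whole dataset in local memory and may lag behind the log."""

    def __init__(self, log: WriteLog):
        self._log = log
        self._data = {}
        self._applied = 0  # number of log entries already applied locally

    def sync(self) -> None:
        """Apply every log entry that has not been applied yet."""
        for key, value in self._log.entries[self._applied:]:
            self._data[key] = value
        self._applied = len(self._log.entries)

    def read(self, key, strong: bool = False):
        """Read from local memory; catch up with the log first if `strong`."""
        if strong:
            self.sync()
        return self._data.get(key)
```

In this model a default read is served straight from local memory and may be stale, while a read with `strong=True` pays the cost of catching up first. An editor preview would be a natural candidate for the strong path, and visitor traffic for the default one.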

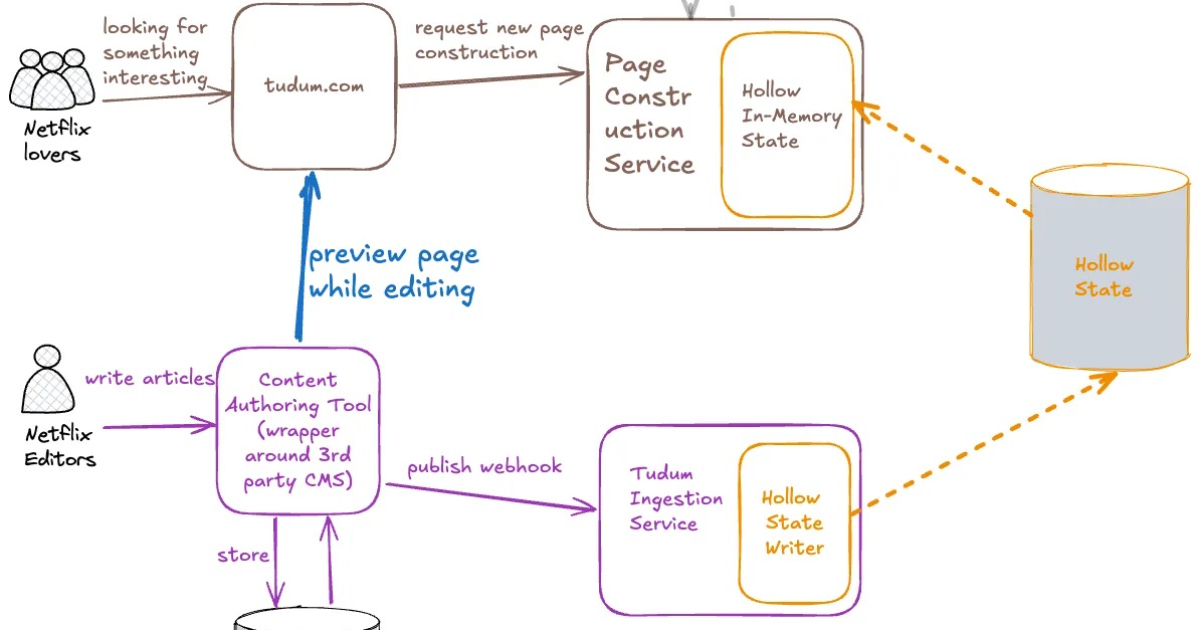

Revised Tudum data architecture (Source: Netflix Engineering Blog)

Tudum engineers replaced Kafka and Cassandra with a RAW Hollow cluster spanning the ingestion and page construction service instances. The team concluded that, for the use case at hand, the CQRS design pattern wasn’t the optimal approach, and that a distributed, in-memory object store suited the situation better. The new solution eliminated cache invalidation problems because the entire dataset could fit into the application’s memory, helped by Hollow’s data compression, which reduced the data size to 25% of its uncompressed size in an Apache Iceberg table.

As a result of the architecture revamp and the supporting data migration, the platform benefited from a significant reduction in data propagation and page construction times, due to reduced request I/O and round-trip times. Tudum engineers believe that the new architecture offers the best of both worlds for editors and visitors.