Table of Links

Abstract and 1 Introduction

2 Related Work

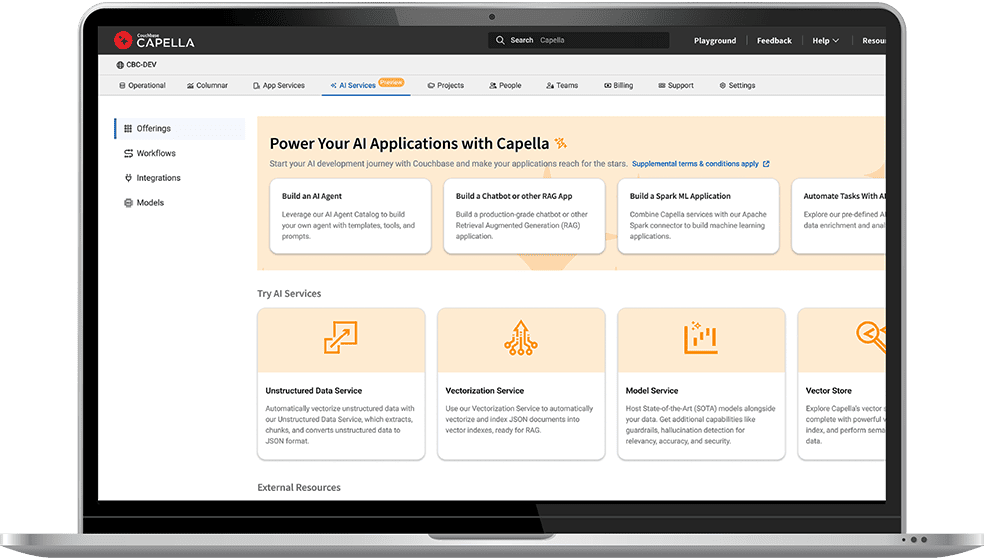

3 Model and 3.1 Associative memories

3.2 Transformer blocks

4 A New Energy Function

4.1 The layered structure

5 Cross-Entropy Loss

6 Empirical Results and 6.1 Empirical evaluation of the radius

6.2 Training GPT-2

6.3 Training Vanilla Transformers

7 Conclusion and Acknowledgments

Appendix A. Deferred Tables

Appendix B. Some Properties of the Energy Functions

Appendix C. Deferred Proofs from Section 5

Appendix D. Transformer Details: Using GPT-2 as an Example

References

7 Conclusion

We model transformer-based networks with associative memory and study how the cross-entropy loss depends on model and data sizes. We propose a new energy function (Eq. 5) that, unlike those common in modern continuous Hopfield networks, does not rely on additional regularization terms, and we show that it corresponds to a nearest-neighbor search across the patterns memorized during training. We then construct a global energy function for the layered structure of transformer models using the majorization-minimization technique.
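The nearest-neighbor interpretation can be illustrated with a minimal sketch. The energy used below is a generic soft-min (negative log-sum-exp) over squared distances to stored patterns. It is an assumed stand-in chosen for illustration, not the energy function of Eq. 5. The names `softmin_energy`, `nearest_pattern`, the inverse temperature `beta`, and the toy patterns are all hypothetical. The sketch only shows that, as `beta` grows, such an energy approaches the squared distance to the nearest stored pattern.

```python
import math

def sq_dist(x, p):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((a - b) ** 2 for a, b in zip(x, p))

def softmin_energy(x, patterns, beta):
    """Illustrative energy (an assumption, not Eq. 5 of the paper):
    E(x) = -(1/beta) * log(sum_i exp(-beta * ||x - p_i||^2)).
    Computed with the max-shift trick for numerical stability."""
    scores = [-beta * sq_dist(x, p) for p in patterns]
    m = max(scores)
    lse = m + math.log(sum(math.exp(s - m) for s in scores))
    return -lse / beta

def nearest_pattern(x, patterns):
    """Index of the stored pattern closest to x (exact nearest-neighbor search)."""
    return min(range(len(patterns)), key=lambda i: sq_dist(x, patterns[i]))

# Hypothetical toy data: three "memorized" patterns and one query.
patterns = [(0.0, 0.0), (1.0, 1.0), (3.0, -1.0)]
query = (0.9, 1.2)

idx = nearest_pattern(query, patterns)
nn_dist = sq_dist(query, patterns[idx])

# The energy is bounded above by the nearest-neighbor squared distance
# and approaches it as beta increases.
for beta in (1.0, 10.0, 100.0):
    print(f"beta={beta:6.1f}  energy={softmin_energy(query, patterns, beta):.6f}  "
          f"nearest sq. distance={nn_dist:.6f}")
```

In this toy setting the gap between the energy and the nearest-neighbor distance shrinks as `beta` increases, which is the sense in which minimizing such an energy behaves like a search over the stored patterns.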

In practice, we have observed that the majority of transformer models tend to achieve a cross-entropy loss of approximately 2.2. The optimal balance between model and data sizes, however, is often determined by the collective expertise of practitioners. Additionally, the performance of these models can be compromised by both early and delayed stopping.

We believe the current paper represents an important step towards understanding the convergence and generalization behaviors of large transformer models. It provides insights into the theoretically optimal cross-entropy loss, which can inform both budgetary planning and model termination strategies.

Acknowledgments

The author thanks Dr. Yongqi Xu for stimulating discussions and practical assistance with the experiments.

Authors:

(1) Xueyan Niu, Theory Laboratory, Central Research Institute, 2012 Laboratories, Huawei Technologies Co., Ltd.;

(2) Bo Bai ([email protected]);

(3) Lei Deng ([email protected]);

(4) Wei Han ([email protected]).

This paper is