The Allen Institute for Artificial Intelligence (Ai2) has launched Olmo 3, an open-source language model family that gives researchers and developers access to the entire model development process. Unlike releases that provide only final weights, Olmo 3 includes checkpoints, training datasets, and tools for every stage of development, spanning pretraining and post-training for reasoning, instruction following, and reinforcement learning.

“Language models are often seen as snapshots of a complex development process, but sharing only the final result misses the crucial context needed for modifications and improvements,” Ai2 stated in its announcement. Olmo 3 addresses this by offering visibility into the entire model lifecycle, allowing users to inspect reasoning traces, modify datasets, and experiment with post-training techniques such as supervised fine-tuning (SFT) and reinforcement learning with verifiable rewards (RLVR).
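In RLVR, the reward signal comes from a programmatic check of the model's output rather than from a learned reward model. The sketch below illustrates that idea for math-style prompts. It assumes a hypothetical convention in which the model ends its completion with a line such as `Answer: 42`; the function names and answer format are illustrative assumptions, not Ai2's training code or the format used in its datasets.

```python
import re
from fractions import Fraction
from typing import Optional

# Hypothetical output convention: the completion ends with "Answer: <number>".
ANSWER_RE = re.compile(r"Answer:\s*(-?\d+(?:/\d+)?(?:\.\d+)?)\s*$")


def extract_answer(completion: str) -> Optional[str]:
    """Return the final answer string, or None if the format was not followed."""
    match = ANSWER_RE.search(completion.strip())
    return match.group(1) if match else None


def verifiable_reward(completion: str, reference: str) -> float:
    """Binary reward: 1.0 if the extracted answer equals the reference numerically."""
    answer = extract_answer(completion)
    if answer is None:
        return 0.0  # Unparseable output earns no reward.
    try:
        # Fraction compares "0.5" and "1/2" as the same value.
        return 1.0 if Fraction(answer) == Fraction(reference) else 0.0
    except (ValueError, ZeroDivisionError):
        return 0.0
```

For example, `verifiable_reward("2 + 2 is 4.\nAnswer: 4", "4")` returns `1.0`, while a completion with no `Answer:` line returns `0.0`. A binary, rule-based reward like this is easy to audit, which is part of why verifiable rewards are attractive for reasoning-focused training.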

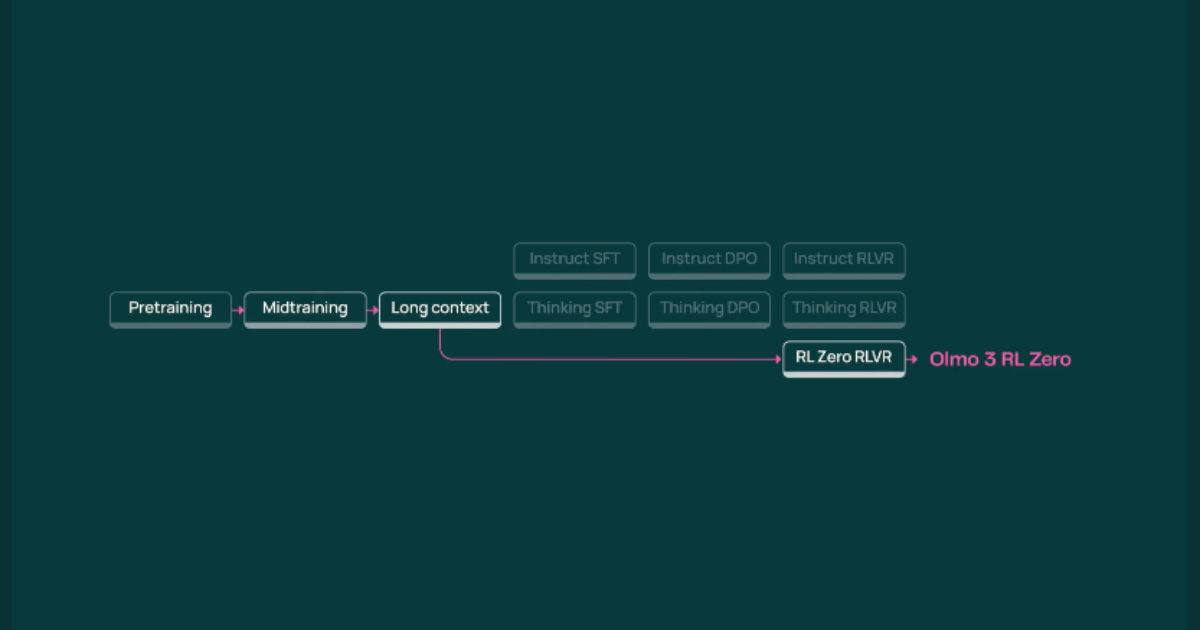

At the heart of the release is Olmo 3-Think (32B), a reasoning-focused model that lets developers inspect intermediate reasoning steps and trace outputs back to the training data. For smaller setups, the 7B variants of Olmo 3-Base, Olmo 3-Think, and Olmo 3-Instruct perform strongly on coding, math, and multi-turn instruction tasks while remaining runnable on modest hardware. The post-training pathways target different use cases: Instruct for chat and tool use, Think for multi-step reasoning, and RL Zero for reinforcement learning research.

The Olmo 3 family demonstrates strong performance across benchmarks. On math and reasoning tests, Olmo 3-Think (32B) matches or outperforms other open-weight models like Qwen 3 and Gemma 3, while Olmo 3-Instruct (7B) excels in instruction following, function calling, and chat. Ai2 highlights that the models maintain quality at extended context lengths, supporting applications that require reasoning over tens of thousands of tokens.

One Reddit user commented:

> I expect and hope for this sub to cheer Olmo more. This is a truly free and open model, with all the data for anyone with the resources to build it from scratch. We should definitely cheer and let those folks know their efforts are truly appreciated to keep them going.

Ai2 also emphasized transparency in training and tooling. The release includes Dolma 3, a 9.3-trillion-token corpus, and Dolci, a post-training dataset suite for reasoning, tool use, and instruction-following tasks. Tools like OlmoTrace let users see how outputs connect to specific training data, closing the gap between model behavior and its sources.
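As a rough intuition for how a model output can be connected to training data, the toy sketch below finds word n-grams that an output shares verbatim with a small in-memory corpus. It is an illustration under stated assumptions only: the function names, whitespace tokenization, and fixed n-gram length are hypothetical choices, and OlmoTrace works over corpora with trillions of tokens, a scale that calls for specialized indexes rather than a Python dictionary.

```python
from collections import defaultdict


def build_ngram_index(documents: dict[str, str], n: int) -> dict[tuple[str, ...], set[str]]:
    """Map every lowercase word n-gram to the IDs of the documents containing it."""
    index: dict[tuple[str, ...], set[str]] = defaultdict(set)
    for doc_id, text in documents.items():
        words = text.lower().split()
        for i in range(len(words) - n + 1):
            index[tuple(words[i:i + n])].add(doc_id)
    return index


def trace_output(output: str, index: dict[tuple[str, ...], set[str]], n: int) -> list[tuple[str, list[str]]]:
    """Return (span, sorted doc IDs) for each output n-gram found verbatim in the index."""
    words = output.lower().split()
    matches = []
    for i in range(len(words) - n + 1):
        gram = tuple(words[i:i + n])
        if gram in index:  # Membership test does not create defaultdict entries.
            matches.append((" ".join(gram), sorted(index[gram])))
    return matches
```

With `docs = {"doc-a": "the quick brown fox jumps", "doc-b": "a brown fox jumps high"}` and `n = 3`, tracing the output `"Yesterday a brown fox jumps over"` reports `"a brown fox"` from `doc-b` and `"brown fox jumps"` from both documents.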

Another Reddit user noted the speed of progress:

> They are making so much progress in such a short time. They basically caught up to the open-weight labs. These guys are cooking, and a truly open-source [model] is no longer in the ‘good effort’ territory.

The release aims to foster experimentation and community engagement. Researchers can fork models at any checkpoint, integrate domain-specific data, or test new reinforcement learning objectives. All models, datasets, and training artifacts are licensed permissively, supporting fully open development for research, education, and applied AI projects.

Olmo 3 represents a bold step toward open-first AI, where transparency, traceability, and community collaboration are central. Users can explore the models in the Ai2 Playground, access them through OpenRouter, or download all checkpoints and datasets to build their own systems.