On October 6, 2025, OpenAI hosted DevDay 2025, where the company introduced AgentKit, its new tooling for agents, and shipped fresh model options in the API: GPT-5 Pro and Sora 2. The day's theme was turning a chatbot into a place where software runs, collaborates, and sells inside the conversation itself.

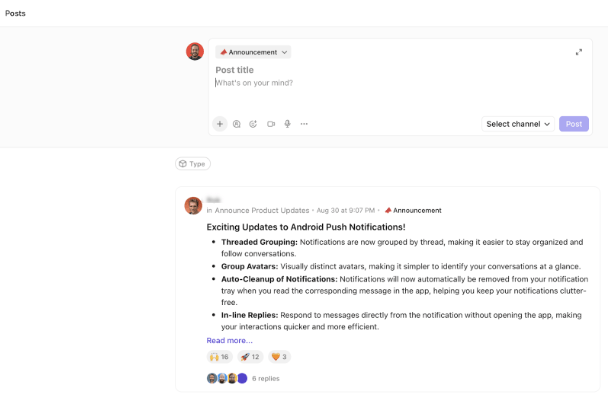

The biggest shift was “apps inside ChatGPT.” With a preview Apps SDK, third-party software can render interactive UI directly in the chat and share context through the Model Context Protocol (MCP). In demos, a user designed a poster with Canva, then pivoted into a Zillow map without leaving the thread. OpenAI said an app directory and a submission review process are coming, with monetization guidance to follow later this year. The pitch is a new distribution channel that uses chat as the runtime, not just the interface.

OpenAI stacked the launch with recognizable partners. Booking.com, Canva, Coursera, Expedia, Figma, Spotify, and Zillow all showed live experiences running in chat. Financial press noted minimal stock reactions overall, though some partners saw pops around the keynote. The broader takeaway is that OpenAI is courting mainstream services to anchor a credible software surface in ChatGPT, while the market waits to see if this becomes habitual end-user behavior.

On the “do work” axis OpenAI formalized agents. AgentKit packages a visual Agent Builder, a Connector Registry for governing data sources, ChatKit for embeddable agent UIs, and integrated evaluation and tracing so teams can observe workflows and improve them. This is the plumbing most orgs end up writing themselves: orchestration, guardrails, metrics, and versioning. OpenAI also highlighted reinforcement fine-tuning that is GA on o4-mini and in private beta on GPT-5.

For teams that need portability, the company emphasized a self-hosted route. The open-source Agents SDK in Python and TypeScript exposes a small set of primitives for agents, handoffs, guardrails, and sessions, with built-in tracing. That makes it plausible to design flows in OpenAI’s UI and operate them on your own stack with the same semantics and observability, which is often the compliance requirement in regulated environments.

Codex moved to general availability. The keynote narrative positioned it less as code completion and more as a building block for longer-running “do it for me” workflows that can reason across project context, call tools, and return working artifacts.

Model options expanded at the top and the edges. GPT-5 Pro arrived in the API and sat beside cheaper real-time models aimed at voice and latency-sensitive use cases. Coverage praised better reasoning and accuracy relative to earlier releases, but communities also flagged uneven latency and consistency. If you are replacing task conduits, not just assistants, those tails matter more than median performance and will drive how you route between high-effort and fast-path models.
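To make the point about tails concrete, here is a minimal sketch of routing on a tail percentile instead of the median. Everything in it is an assumption for illustration: the function names, the route labels `high_effort` and `fast_path`, and the latency samples are hypothetical and do not come from any OpenAI API.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile of the samples; pct is in (0, 100]."""
    if not samples:
        raise ValueError("need at least one latency sample")
    ordered = sorted(samples)
    # Nearest-rank method: the smallest value with at least pct% of samples at or below it.
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def choose_route(high_effort_latencies_s: Sequence[float],
                 budget_s: float,
                 pct: float = 95.0) -> str:
    """Pick the high-effort model only if its tail latency fits the budget.

    The route names are hypothetical labels, not model identifiers.
    """
    tail = percentile(high_effort_latencies_s, pct)
    return "high_effort" if tail <= budget_s else "fast_path"


# Hypothetical measurements: most calls take 1 s, but two are very slow.
samples = [1.0] * 18 + [9.0, 12.0]
median = percentile(samples, 50)   # 1.0 s, looks fine
p95 = percentile(samples, 95)      # 9.0 s, what a workflow actually waits on
route = choose_route(samples, budget_s=5.0)
```

With these made-up numbers the median suggests the high-effort model is fast enough, while the 95th percentile blows a 5-second budget, so the request goes to the fast path.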

OpenAI formalized the Sora app and opened the new model to developers, while the news cycle focused on copyright, deepfake concerns, and watermark handling. If you intend to pipe Sora 2 into commercial pipelines, plan for provenance, consent, and content review from the start rather than bolting them on later. Hardware stayed in the rumor zone: a fireside chat with Jony Ive teased the philosophy behind new devices, while reporting around the event suggested delays and unresolved design tradeoffs.

MCP-style connectors expand attack surface and raise exfiltration risk when agents move freely between tools. Researchers have already demonstrated connector exploits, while OpenAI’s guidance stresses least privilege, explicit consent, and defense in depth. Treat connectors as production integrations, keep audit trails, and assume prompt injection will reach your edges. The upside of the new tracing and evals is that you can see and score behavior rather than relying on anecdotes.
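One way to picture least privilege, explicit consent, and audit trails together is a small gate that every connector call must pass through. This is a sketch under stated assumptions, not OpenAI's Connector Registry or any MCP API: the `ConnectorPolicy` class, the `connector:action` scope format, and the agent and connector names are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ConnectorPolicy:
    """Hypothetical allowlist: agent name -> set of 'connector:action' scopes."""
    allowed: dict[str, set[str]]
    audit_log: list[dict] = field(default_factory=list)

    def authorize(self, agent: str, connector: str, action: str,
                  user_consented: bool) -> bool:
        """Deny by default; allow only allowlisted scopes with explicit consent."""
        scope = f"{connector}:{action}"
        permitted = user_consented and scope in self.allowed.get(agent, set())
        # Record every decision, including denials, so behavior can be reviewed later.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "agent": agent,
            "scope": scope,
            "consented": user_consented,
            "permitted": permitted,
        })
        return permitted


# Hypothetical setup: the support agent may only read from the CRM.
policy = ConnectorPolicy(allowed={"support_agent": {"crm:read"}})
read_ok = policy.authorize("support_agent", "crm", "read", user_consented=True)
write_ok = policy.authorize("support_agent", "crm", "write", user_consented=True)
no_consent = policy.authorize("support_agent", "crm", "read", user_consented=False)
```

The gate denies anything not explicitly granted, which limits what a successful prompt injection can reach, and the log keeps denied attempts as well as approved ones.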

Sessions on interactive evaluations and ARC-AGI-3 emphasized action efficiency and first-run generalization over static paper benchmarks. For engineering managers, that translates to building eval sets that look like your real workflows, grading full traces, and optimizing the slowest agents in the chain rather than the average.
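As a rough illustration of grading full traces and hunting for the slowest agent, the sketch below scores a whole run and then ranks agents by their worst observed step. The `Step` record, the agent names, and the timings are hypothetical; real traces from a tracing tool would carry more fields.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Step:
    """One hypothetical step in a recorded agent trace."""
    agent: str
    seconds: float
    ok: bool


def grade_trace(steps: Sequence[Step]) -> dict:
    """Grade the whole trace: it passes only if it is non-empty and every step succeeded."""
    return {
        "passed": bool(steps) and all(s.ok for s in steps),
        "actions": len(steps),                 # fewer actions means higher action efficiency
        "seconds": sum(s.seconds for s in steps),
    }


def slowest_agent(traces: Sequence[Sequence[Step]]) -> str:
    """Return the agent with the worst single-step latency across all traces."""
    worst: dict[str, float] = defaultdict(float)
    for trace in traces:
        for step in trace:
            worst[step.agent] = max(worst[step.agent], step.seconds)
    return max(worst, key=worst.get)


# Hypothetical traces from two runs of the same workflow.
traces = [
    [Step("planner", 0.5, True), Step("retriever", 4.0, True), Step("writer", 1.0, True)],
    [Step("planner", 0.4, True), Step("retriever", 1.0, False)],
]
grades = [grade_trace(t) for t in traces]
bottleneck = slowest_agent(traces)
```

Ranking by worst case rather than mean is a deliberate choice here: it surfaces the agent that stalls the chain, which an average across steps would hide.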

Developers looking to learn more can start with the official DevDay recap and product posts, then work through the Apps SDK, AgentKit, and Agents SDK docs and session recordings referenced above to replicate the demos and adapt them to real workloads.