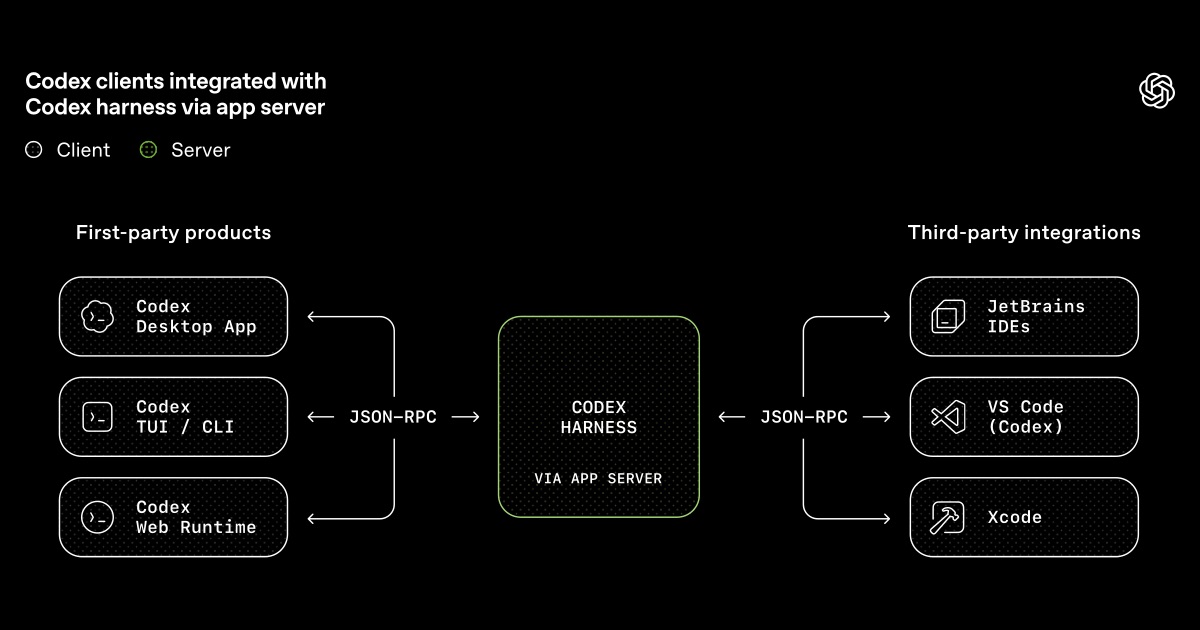

OpenAI has recently published a detailed architecture description of the Codex App Server, a bidirectional protocol that decouples the Codex coding agent’s core logic from its various client surfaces. The App Server now powers every Codex experience, including the CLI, the VS Code extension, the web app, the macOS desktop app, and third-party IDE integrations from JetBrains and Apple’s Xcode, through a single, stable API.

The post, written by OpenAI engineer Celia Chen, is part of an ongoing series detailing the internals of the open-source Codex CLI. The central challenge, as Chen describes it, is that agent interactions are fundamentally different from simple request/response exchanges:

> One user request can unfold into a structured sequence of actions that the client needs to represent faithfully: the user’s input, the agent’s incremental progress, artifacts produced along the way.

The Codex App Server’s internal architecture: the stdio reader and Codex message processor translate client JSON-RPC requests into Codex core operations and transform internal events back into stable, UI-ready notifications (Source)

To model this, OpenAI designed three conversation primitives. An Item is the atomic unit of input or output with an explicit lifecycle of “started”, optional streaming “delta” events, and “completed”. This can be a user message, an agent message, a tool execution, an approval request, or a diff. A Turn groups the sequence of items produced by a single unit of agent work, initiated by user input. A Thread is the durable container for an ongoing session, supporting creation, resumption, forking, and archival with persisted event history so clients can reconnect without losing state.
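The relationship between the three primitives can be sketched as a small data model. This is a minimal illustration rather than the actual App Server schema: the type names, field names, and the `applyItemEvent` helper are all assumptions made for the example, and only the "started", "delta", and "completed" lifecycle comes from the description above.

```typescript
// Hypothetical shapes for illustration only; not the real Codex App Server schema.
type ItemKind =
  | "userMessage"
  | "agentMessage"
  | "toolExecution"
  | "approvalRequest"
  | "diff";

interface Item {
  id: string;
  kind: ItemKind;
  text: string; // content accumulated from streamed deltas
  completed: boolean;
}

// A Turn groups the items produced by one unit of agent work.
interface Turn {
  id: string;
  items: Item[];
}

// A Thread is the durable container holding the turns of a session.
interface Thread {
  id: string;
  turns: Turn[];
}

// The item lifecycle: started, zero or more deltas, then completed.
type ItemEvent =
  | { type: "started"; itemId: string; kind: ItemKind }
  | { type: "delta"; itemId: string; text: string }
  | { type: "completed"; itemId: string };

// Returns a new Turn with the event applied; the input is not mutated.
function applyItemEvent(turn: Turn, event: ItemEvent): Turn {
  if (event.type === "started") {
    const item: Item = {
      id: event.itemId,
      kind: event.kind,
      text: "",
      completed: false,
    };
    return { ...turn, items: [...turn.items, item] };
  }
  const items = turn.items.map((item) => {
    // Ignore events for other items, and any event arriving after completion.
    if (item.id !== event.itemId || item.completed) return item;
    return event.type === "delta"
      ? { ...item, text: item.text + event.text }
      : { ...item, completed: true };
  });
  return { ...turn, items };
}
```

A client could fold every incoming event through a function like this and render the resulting `Turn`, which is one way to keep the UI consistent with a replayed event history after a reconnect.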

The protocol also supports server-initiated requests. When the agent needs approval before executing a command, the server sends a request to the client and pauses the turn until it receives an “allow” or “deny” response. Communication uses JSON-RPC streamed as JSONL over stdio, and OpenAI has designed it to be backward compatible so older clients can safely talk to newer server versions.
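To make the transport concrete, the following sketch shows JSONL framing and a client answering a server-initiated approval request. It assumes standard JSON-RPC 2.0 envelopes; the method name `approval/request`, the `command` parameter, and the `decision` result field are hypothetical, and the real wire format may differ in its details.

```typescript
// Assumed JSON-RPC 2.0 request envelope; field contents are illustrative.
interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number | string;
  method: string;
  params?: unknown;
}

type Decision = "allow" | "deny";

// Accumulates raw stdio chunks and yields one parsed message per complete line.
class JsonlDecoder {
  private buffer = "";

  push(chunk: string): unknown[] {
    this.buffer += chunk;
    const lines = this.buffer.split("\n");
    // The last element is an incomplete line (or ""), so keep it buffered.
    this.buffer = lines.pop() ?? "";
    return lines
      .filter((line) => line.trim() !== "")
      .map((line) => JSON.parse(line) as unknown);
  }
}

// Serializes one message as a single newline-terminated JSON line.
function encodeJsonl(message: unknown): string {
  return JSON.stringify(message) + "\n";
}

// Builds the response to a server-initiated request, echoing its id so the
// server can match the answer to the paused turn.
function answerApproval(
  request: JsonRpcRequest,
  decide: (params: unknown) => Decision,
): string {
  return encodeJsonl({
    jsonrpc: "2.0",
    id: request.id,
    result: { decision: decide(request.params) },
  });
}
```

The decoder buffers partial lines because a stdio read can end in the middle of a message. A real client would write the string returned by `answerApproval` to the server's stdin.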

Notably, OpenAI tried and rejected the Model Context Protocol (MCP) before arriving at this design. Chen explains that when building the VS Code extension, the team initially experimented with exposing Codex as an MCP server, but “maintaining MCP semantics in a way that made sense for VS Code proved difficult.” The richer session semantics required for IDE interactions, such as streaming diffs, approval flows, and thread persistence, did not map cleanly onto MCP’s tool-oriented model. OpenAI still supports running Codex as an MCP server for simpler workflows, but recommends the App Server for full-fidelity integrations.

Protocol message flow for tool execution with optional approval. The server pauses the turn and sends a request to the client, which must respond with ‘allow’ or ‘deny’ before the agent can proceed (Source)

The article describes three deployment patterns used by clients to embed the App Server. Local clients like the VS Code extension and desktop app bundle a platform-specific binary, launch it as a child process, and keep a bidirectional stdio channel open. Partners like Xcode decouple release cycles by keeping the client stable while pointing to a newer App Server binary, allowing them to adopt server-side improvements without waiting for a client release.

The Codex Web runtime, the third pattern, takes a different approach: a worker provisions a container, launches the App Server inside it, and the browser communicates via HTTP and Server-Sent Events. This keeps the browser-side UI lightweight while the server remains the source of truth for long-running tasks.
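For readers unfamiliar with Server-Sent Events, the sketch below parses the standard SSE text format into discrete events. It illustrates the wire format only: in a browser the built-in `EventSource` API does this work, and the event name and payload used in the usage example are hypothetical, not taken from Codex Web.

```typescript
interface SseEvent {
  event: string; // event type; defaults to "message" per the SSE format
  data: string; // data lines joined with "\n"
}

// Parses a complete SSE text stream. An event is emitted at each blank line;
// a trailing event without a terminating blank line is discarded.
function parseSse(stream: string): SseEvent[] {
  const events: SseEvent[] = [];
  let eventType = "message";
  let dataLines: string[] = [];

  for (const line of stream.split(/\r\n|\n|\r/)) {
    if (line === "") {
      // Blank line: dispatch the buffered event if it carries any data.
      if (dataLines.length > 0) {
        events.push({ event: eventType, data: dataLines.join("\n") });
      }
      eventType = "message";
      dataLines = [];
      continue;
    }
    if (line.startsWith(":")) continue; // comment line, often a keep-alive

    const colon = line.indexOf(":");
    const field = colon === -1 ? line : line.slice(0, colon);
    let value = colon === -1 ? "" : line.slice(colon + 1);
    if (value.startsWith(" ")) value = value.slice(1); // strip one leading space

    if (field === "event") eventType = value;
    else if (field === "data") dataLines.push(value);
  }
  return events;
}

// Usage with a hypothetical event name and payload:
const sample = 'event: item/delta\ndata: {"text":"Hi"}\n\n';
const parsed = parseSse(sample);
```

Because SSE only flows from server to browser, client input such as a new user message or an approval decision would travel over ordinary HTTP requests.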

The App Server’s evolution parallels broader industry efforts to standardize agent-editor communication. The Agent Client Protocol (ACP), initiated by Zed Industries and now supported by JetBrains, takes a complementary approach. Where OpenAI’s App Server is a protocol specific to the Codex harness, ACP aims to be a universal standard for connecting any coding agent to any editor, drawing a direct analogy to how the Language Server Protocol standardized language tooling a decade ago. Codex CLI itself is listed among ACP-compatible agents. The coexistence of these approaches reflects an industry still determining the right abstraction boundaries for agent integration, a space OpenAI acknowledges is “evolving quickly.”

All source code for the Codex App Server is available in the open-source Codex CLI repository, and the protocol documentation includes schema generation tools for TypeScript and JSON Schema to facilitate client bindings in any language.