Around late 2023, when prompt engineering was the hottest skill on the market, I thought it would be temporary: a hack to use until models got “smart enough” to read our minds. Then I started studying how current frontier models respond to structural perturbations in prompts. Not rewording or better phrasing, but the deeper stuff: objective steering, latent-space alignment, context allocation. Suddenly, the idea that prompt engineering will disappear felt as outdated as claiming programming would vanish because GUIs exist.

The real shift is subtle: prompt engineering is moving away from simply describing the problem you want AI to solve and toward designing the system around it.

The question that started all this was simple:

If models keep improving at instruction-following, will humans still need to engineer prompts at all?

Most people instinctively answer “no.” Models are becoming more obedient, multimodal, and agentic. But my recent research paints a more complicated picture.

The Industry Reality Check

Walk into any AI company building production systems, and you’ll find prompt engineering very much alive. Not the ChatGPT tricks you see on LinkedIn; I’m talking about systematic approaches that companies depend on for their core products.

Earlier this year, Google released a 68-page prompt engineering whitepaper aimed at API users, packed with detailed technical guidance [1].

Talk to companies like Cursor, Bolt, or Cluely, and their founders will tell you straight: the system prompt really matters. Cluely’s production system prompt, for example, is not “act as an expert” followed by a question. It’s carefully architected context that defines boundaries, establishes reasoning patterns, and handles edge cases across thousands of user interactions.

The difference between hobbyist prompting and production prompt engineering is the difference between writing a Python script and deploying a web application at scale. One works on your laptop. The other has to handle real users, edge cases, and cloud infrastructure, and fail gracefully.

I went through the 2024 studies on latent policy drift in large language models. When you change not the phrasing of a prompt but the relative position of its goals and constraints, performance on complex tasks can swing by 20–40% [2]. The same work reported that even GPT-4-class models exhibit hierarchical misalignment unless constraints are scaffolded in a specific order, and that in multi-agent settings, prompt structure affects coordination more than the raw instructions do.
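One way to probe this ordering effect yourself is to hold every section’s wording fixed and vary only its position. The sketch below is illustrative, not the cited studies’ method: it assumes a prompt split into three named sections (the labels `goal`, `constraints`, and `examples` and the hypothetical helper `order_variants` are my own) and yields every ordering, so each variant can be scored on the same task set.

```python
from itertools import permutations


def order_variants(sections):
    """Yield (order, prompt) for every ordering of the given sections.

    `sections` maps a section label to its text. Only the order changes;
    the wording of each section is identical across all variants.
    """
    for order in permutations(sections):
        # Each section gets a bracketed label; blank lines separate sections
        prompt = "\n\n".join(f"[{name.upper()}]\n{sections[name]}" for name in order)
        yield order, prompt


# Hypothetical example sections
sections = {
    "goal": "Decide whether the refund request is valid.",
    "constraints": "Use only the provided records. State uncertainty explicitly.",
    "examples": "Q: Is a 10-day-old charge refundable? A: No, the window is 72 hours.",
}

for order, prompt in order_variants(sections):
    print(" -> ".join(order))
```

With three sections this produces six variants; with more sections the count grows factorially, so in practice you would sample orderings rather than test them all.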

Maybe prompt engineering isn’t going away.

Maybe it’s just evolving into something less visible, more architectural, and harder to automate.

Recent surveys frame prompting as a full-blown design space, not a bag of hacks. Sahoo et al. map out dozens of prompting techniques and show that “how you talk to the model” is effectively a new layer of programmability on top of the weights. Schulhoff et al. go further, organizing 58+ prompting patterns into a taxonomy and arguing that prompt engineering is becoming a software engineering discipline in its own right [3].

And that’s the story that actually needs telling, because nearly every discussion online is stuck at the level of “here’s a better way to phrase your prompt,” while the real action is in how we shape the interfaces between humans and autonomous reasoning systems.

From Prompt Engineering to Context Engineering

The terminology in this industry is changing faster than the practice itself. What we used to call “prompt engineering” is increasingly called “context engineering,” but the core ideas are similar.

Context engineering is a much broader approach that focuses on everything the model knows and perceives when it generates a response, going beyond just the words in your prompt. This includes system instructions, retrieved knowledge, tool definitions, conversation summaries, and task metadata.

Think about what actually happens when you interact with the latest AI apps. You type a question, but the model doesn’t see just your question. It sees:

- System instructions defining its role and constraints

- Retrieved documents from your knowledge base (retrieval-augmented generation, or RAG)

- Conversation history from the current session

- User metadata and preferences

- Tool definitions it can call

- Output format specifications

This is context engineering. According to recent findings, prompt engineering can handle about 70–80% of the optimization work in these systems, but it is always one part of a larger context management strategy.
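To make the components listed above concrete, it can help to treat the context as a data structure rather than a single string. The sketch below is hypothetical: the `ContextBundle` class and its field names are my own, not any framework’s API. It renders each component into a list of role/content messages, a common shape for chat-style APIs. Real tool definitions usually travel through a dedicated API parameter; here they are folded into the system message only to keep the sketch self-contained.

```python
from dataclasses import dataclass, field


@dataclass
class ContextBundle:
    """Everything the model will see, kept as separate named parts (hypothetical)."""
    system_instructions: str
    user_query: str
    retrieved_docs: list = field(default_factory=list)
    history: list = field(default_factory=list)  # prior (role, text) turns
    user_metadata: dict = field(default_factory=dict)
    tools: list = field(default_factory=list)  # tool names, for illustration only
    output_format: str = ""

    def to_messages(self):
        """Flatten the bundle into role/content messages, system message first."""
        system_parts = [self.system_instructions]
        if self.tools:
            system_parts.append("Tools: " + ", ".join(self.tools))
        if self.output_format:
            system_parts.append("Output format: " + self.output_format)
        if self.user_metadata:
            prefs = "; ".join(f"{k}={v}" for k, v in self.user_metadata.items())
            system_parts.append("User metadata: " + prefs)

        messages = [{"role": "system", "content": "\n".join(system_parts)}]
        # Replay the conversation history in its original order
        messages += [{"role": role, "content": text} for role, text in self.history]

        # Retrieved documents are attached to the current question
        docs = "\n".join(f"[Doc {i + 1}] {d}" for i, d in enumerate(self.retrieved_docs))
        user_content = f"{docs}\n\n{self.user_query}" if docs else self.user_query
        messages.append({"role": "user", "content": user_content})
        return messages


bundle = ContextBundle(
    system_instructions="You are a support analyst.",
    user_query="Is the second transaction eligible for refund?",
    retrieved_docs=["Refund window for this merchant is 72 hours."],
    history=[("user", "Hi"), ("assistant", "Hello! How can I help?")],
    user_metadata={"locale": "en-US"},
    tools=["search_db"],
    output_format="JSON with keys 'answer' and 'evidence'",
)
for m in bundle.to_messages():
    print(m["role"], "|", m["content"].splitlines()[0])
```

Keeping the parts separate until the last moment means each one can be swapped, truncated, or tested independently, which is most of what “context engineering” amounts to in practice.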

Context Engineering in Practice

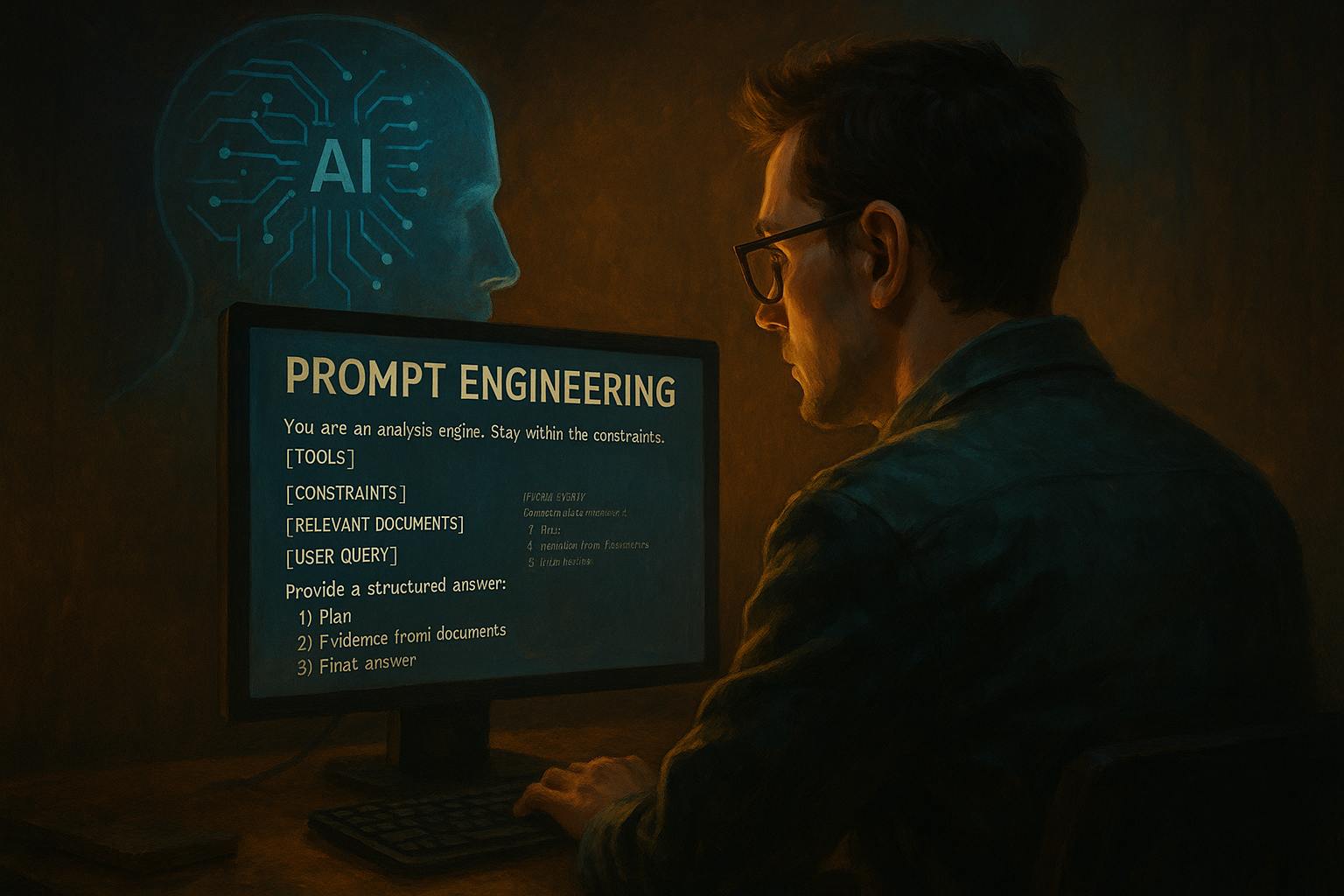

Below is a version of the code I use when testing multi-step reasoning for a retrieval + tool + analysis workflow.

```python
def build_context(user_query, retrieved_docs, tools, constraints):
    """Assemble a sectioned prompt: system rules, tools, constraints, documents, query."""
    system_block = (
        "You are an analysis engine. Stay within the constraints.\n"
        "Always justify intermediate steps, but keep internal reasoning short.\n"
        "Never fabricate missing data."
    )

    # One bullet per tool: name and description
    tool_block = "\n".join(f"- {t['name']}: {t['description']}" for t in tools)

    # One bullet per constraint
    constraint_block = "\n".join(f"- {c}" for c in constraints)

    # Number the documents so the model can cite them as [Doc N]
    retrieval_block = "\n".join(
        f"[Doc {i + 1}] {d}" for i, d in enumerate(retrieved_docs)
    )

    sections = [
        system_block,
        f"[TOOLS]\n{tool_block}",
        f"[CONSTRAINTS]\n{constraint_block}",
        f"[RELEVANT DOCUMENTS]\n{retrieval_block}",
        f"[USER QUERY]\n{user_query}",
        "Provide a structured answer:\n1) Plan\n2) Evidence from documents\n3) Final answer",
    ]
    # Proper newlines between sections: one blank line separates each block
    return "\n\n".join(sections)


# Example
tools = [
    {"name": "search_db", "description": "Query the internal SQL dataset"},
    {"name": "compute", "description": "Perform basic arithmetic or comparisons"},
]

constraints = [
    "Do not use information outside the provided documents.",
    "If unsure, state uncertainty explicitly.",
    "Prefer step-by-step plans before final answers.",
]

retrieved_docs = [
    "Payment record shows two pending transactions from user A.",
    "Refund window for this merchant is 72 hours.",
]

user_query = "Is the second transaction eligible for refund?"

context = build_context(
    user_query=user_query,
    retrieved_docs=retrieved_docs,
    tools=tools,
    constraints=constraints,
)

print(context)
```

Output:

```text
You are an analysis engine. Stay within the constraints.
Always justify intermediate steps, but keep internal reasoning short.
Never fabricate missing data.

[TOOLS]
- search_db: Query the internal SQL dataset
- compute: Perform basic arithmetic or comparisons

[CONSTRAINTS]
- Do not use information outside the provided documents.
- If unsure, state uncertainty explicitly.
- Prefer step-by-step plans before final answers.

[RELEVANT DOCUMENTS]
[Doc 1] Payment record shows two pending transactions from user A.
[Doc 2] Refund window for this merchant is 72 hours.

[USER QUERY]
Is the second transaction eligible for refund?

Provide a structured answer:
1) Plan
2) Evidence from documents
3) Final answer
```

The shift from prompt engineering to context engineering reflects a maturing view of AI systems. As Andrej Karpathy and others have pointed out, the term “prompt engineering” has been diluted to mean “typing things into a chatbot,” when what we’re really doing is designing the entire information environment that surrounds model inference.

What’s Actually Changing (And What Isn’t)

The mechanical tricks will keep dying out, while deep understanding is becoming more valuable. Let me break this down with specifics.

What’s becoming less important:

- Elaborate formatting with special characters (like ###, XML tags, etc.)

- Token counting and manual context window management

- Model-specific prompt templates that need complete rewrites between versions

What’s becoming more important:

- Understanding how to structure information hierarchically

- Knowing when to use RAG versus prompt engineering versus fine-tuning

- Designing system prompts that remain stable across thousands of user inputs

- Building evaluation frameworks to measure prompt performance objectively
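The last item is where most teams start. A minimal sketch of such a harness, assuming a `model` callable that maps a prompt string to a response string (a hypothetical stand-in for whatever client you actually use), runs a prompt template over labeled cases and reports the pass rate. The normalized exact-match grader is a deliberately simple choice; real evaluations often add schema validation or model-graded rubrics.

```python
def evaluate(template, cases, model):
    """Return (pass_rate, failures) for a prompt template over labeled cases.

    template: callable turning a case's input into a full prompt string
    cases:    list of {"input": ..., "expected": ...} dicts
    model:    callable prompt -> response text (hypothetical stand-in client)
    """
    failures = []
    for case in cases:
        response = model(template(case["input"]))
        # Normalized exact match: ignore surrounding whitespace and letter case
        if response.strip().lower() != case["expected"].strip().lower():
            failures.append((case["input"], response))
    passed = len(cases) - len(failures)
    pass_rate = passed / len(cases) if cases else 0.0
    return pass_rate, failures


# Toy stand-ins so the sketch runs without any API
def fake_model(prompt):
    return "Eligible" if "within 72 hours" in prompt else "Not eligible"


def template(user_input):
    return f"Answer with Eligible or Not eligible.\n\n{user_input}"


cases = [
    {"input": "Charge made within 72 hours.", "expected": "Eligible"},
    {"input": "Charge made 10 days ago.", "expected": "Not eligible"},
    {"input": "Charge made 2 hours ago.", "expected": "Eligible"},
]
rate, failed = evaluate(template, cases, fake_model)
print(f"pass rate: {rate:.2f}, failures: {len(failed)}")
```

The toy model fails the third case because it keys on a literal phrase instead of reasoning about time, which is exactly the kind of brittleness a fixed case set is meant to surface before a prompt change ships.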

Instruction-Following Doesn’t Kill Structure

One of the most common things I hear is that better instruction-following will make prompt engineering irrelevant. But instruction-following only removes surface noise; it doesn’t remove the underlying geometry of how models reason.

When Anthropic published their 2024 interpretability work showing early-layer steering vectors that override user intent unless redirectors appear in the first 15% of the prompt window, something clicked for me [4]. The bottleneck is not fluency; it’s context topology.
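If placement matters this much, it is cheap to check mechanically. The sketch below is a hypothetical lint, not a tool from the cited work: `early_placement_report` reports whether each required instruction begins within the first 15% of the prompt, using character offsets as a rough proxy for token positions (a real check would use the target model’s own tokenizer).

```python
def early_placement_report(prompt, instructions, fraction=0.15):
    """Map each instruction to True if it starts within the first `fraction` of the prompt.

    Character offsets stand in for token positions (an approximation).
    Instructions that do not appear in the prompt at all map to False.
    """
    cutoff = int(len(prompt) * fraction)
    report = {}
    for text in instructions:
        pos = prompt.find(text)
        report[text] = pos != -1 and pos < cutoff
    return report


rule = "Never fabricate missing data."
filler = "Background notes. " * 40  # stands in for long retrieved context

late = filler + rule   # key rule buried at the end
early = rule + "\n" + filler  # key rule stated up front

print(early_placement_report(late, [rule]))
print(early_placement_report(early, [rule]))
```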


Most people think of a prompt as linear. But LLMs don’t see it that way. They collapse your instructions into a hierarchy of goals, constraints, and heuristics. Change the ordering and you change the hierarchy; change the hierarchy and you change the model’s behavior.

That’s why, even now, you can take the same task description, swap the position of the constraint block and the examples, and watch accuracy jump by as much as 30%. The model didn’t suddenly “understand” you better. You just rearranged the shape of the objective it was constructing internally.
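One way to keep the model from inferring a hierarchy you didn’t intend is to make the hierarchy explicit and let it drive the order. A minimal sketch, assuming the three tiers named above (goals, constraints, heuristics) and a hypothetical `render_hierarchy` helper: items are sorted by tier before rendering, so shuffling the input list cannot silently move a heuristic ahead of a constraint.

```python
# Lower number = higher precedence = earlier in the prompt (assumed ordering)
TIER_ORDER = {"goal": 0, "constraint": 1, "heuristic": 2}


def render_hierarchy(items):
    """Render (tier, text) pairs grouped into labeled sections, ordered by tier.

    Python's sort is stable, so items within one tier keep their input order.
    """
    ranked = sorted(items, key=lambda item: TIER_ORDER[item[0]])
    lines, current = [], None
    for tier, text in ranked:
        if tier != current:
            # Start a new labeled section whenever the tier changes
            if lines:
                lines.append("")
            lines.append(f"[{tier.upper()}S]")
            current = tier
        lines.append(f"- {text}")
    return "\n".join(lines)


items = [
    ("heuristic", "Prefer recent records when they conflict."),
    ("constraint", "Use only the provided documents."),
    ("goal", "Decide whether the refund is allowed."),
    ("constraint", "State uncertainty explicitly."),
]
print(render_hierarchy(items))
```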

The Linguistic Layer vs. the Cognitive Layer

We’re finally past the era where prompt engineering is about phrasing sentences. Nobody should be mixing metaphors into a prompt to get better SQL.

But what replaced it is more interesting: what I think of as the cognitive layer of prompting.

Not “word choice” but “goal decomposition.”

Not “tricks” but “interfaces.”

Frontier models aren’t unpredictable. They’re just operating on a grammar of reasoning that we’re still learning how to write for.

Models respond more strongly to the position of constraints than to their specific wording. That’s also why agentic systems in 2025, especially multi-step planners, rely on prompts that allocate reasoning across different sections of the context. There’s a reason almost every successful agent today uses some variant of modular prompting: task plan, action loop, evaluator, fail-state logic.
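The four-part pattern named above can be sketched as a small control loop. Everything here is hypothetical: `plan`, `act`, and `evaluate` are caller-supplied callables standing in for separate model calls, each of which would get its own prompt section, and the fail state is simply a retry budget that ends in an explicit failure instead of a guessed answer.

```python
def run_agent(task, plan, act, evaluate, max_attempts=3):
    """Plan once, then act and evaluate each step; enter a fail state on repeated failure.

    plan(task) -> list of step descriptions          (task plan)
    act(step, notes) -> result string for that step  (action loop)
    evaluate(step, result) -> True if acceptable     (evaluator)
    """
    notes = []
    for step in plan(task):
        for _ in range(max_attempts):
            result = act(step, notes)
            if evaluate(step, result):
                notes.append(result)
                break
        else:
            # Fail-state logic: no attempt passed, so surface the failure explicitly
            return {"status": "failed", "step": step, "notes": notes}
    return {"status": "done", "notes": notes}


# Toy stand-ins so the control flow runs without a model
def plan(task):
    return ["look up transactions", "check refund window"]


def act(step, notes):
    return f"{step}: ok"


def evaluate(step, result):
    return result.endswith("ok")


print(run_agent("Is the second transaction refundable?", plan, act, evaluate))
```

Separating the evaluator from the actor is the design point: the step that judges a result gets its own instructions, so a failure is detected by structure rather than by hoping the actor notices its own mistake.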

The Counterargument (And Why It Still Fails)

Every year someone predicts that “once models get good enough, prompting won’t matter.” But better models don’t erase structure; they expose it.

Frontier models in 2025 are far more capable than their predecessors, but they are also more sensitive to context topology than ever before. They can reason across documents, tools, memory, and long-horizon tasks, which means the cost of poorly structured context is higher, not lower. Bigger models amplify small misalignments. They follow instructions, yes, but they follow the hierarchy they infer, not the hierarchy you think you gave them.

So Does Prompt Engineering Still Matter?

I think prompt engineering matters because LLMs still don’t have access to your internal intentions. They only have the context you build for them: system instructions, retrieved knowledge, constraints, examples, tool APIs, memory slots, and conversation history.

Prompting has just expanded into the rest of the system.

The mechanical layer is fading.

The cognitive layer is becoming everything.

And that’s why the idea that prompt engineering will disappear never made sense. It will matter less as a front-facing skill, but far more as a back-end design discipline.

The paradox is simple:

As LLMs become more capable, the cost of unstructured reasoning goes up. So the value of structured context goes up with it.

Final Thought

- We’re not writing prompts anymore.

- We’re designing reasoning environments.

- And those aren’t going away; they’re becoming infrastructure.

References

[1] L. Boonstra, Prompt Engineering (2025), Google Whitepaper

[2] J. Smith et al., Latent Policy Drift in Large Language Models (2024), arXiv

[3] L. Zhu, Hierarchical Misalignment in Instruction-Following Models (2024), ACL

[4] Anthropic Research Team, Steering Vectors and Early-Layer Overrides in LLMs (2024), Anthropic Interpretability