Meta’s PyTorch team has unveiled Monarch, an open-source framework designed to simplify distributed AI workflows across multiple GPUs and machines. The system introduces a single-controller model that allows one script to coordinate computation across an entire cluster, reducing the complexity of large-scale training and reinforcement learning tasks without changing how developers write standard PyTorch code.

Monarch replaces the traditional multi-controller approach, in which multiple copies of the same script run independently across machines (the SPMD style familiar from `torchrun`), with a single-controller model. In this architecture, one script orchestrates everything from spawning GPU processes to handling failures, so developers get the experience of working locally while their code actually runs across an entire cluster.

The PyTorch team describes it as bringing “the simplicity of single-machine PyTorch to entire clusters.” Developers can use familiar Pythonic constructs such as functions, classes, loops, and futures to define distributed systems that scale across a cluster, without rewriting their logic to handle synchronization or failures manually.
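A minimal, pure-Python sketch of the single-controller idea follows. It is not Monarch code: the `Worker` class, its `train_step` method, and the `controller` function are hypothetical, and local threads from `concurrent.futures` stand in for remote GPU processes. What it shows is the shape of the pattern: one function owns the training loop, dispatches each step to every worker, and gathers the results as futures.

```python
from concurrent.futures import Future, ThreadPoolExecutor


class Worker:
    """Hypothetical stand-in for a remote GPU process; here it is a local object."""

    def __init__(self, rank: int) -> None:
        self.rank = rank

    def train_step(self, step: int) -> float:
        # Pretend to compute a loss; real work would run on this worker's GPU.
        return 1.0 / (step + 1) + 0.01 * self.rank


def controller(num_workers: int, num_steps: int) -> list[float]:
    """Single controller: one function owns the loop and every worker."""
    workers = [Worker(rank) for rank in range(num_workers)]
    mean_losses: list[float] = []
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        for step in range(num_steps):
            # Send the same step to every worker and collect one future per worker.
            futures: list[Future] = [pool.submit(w.train_step, step) for w in workers]
            # Block until all workers finish, then aggregate in the controller.
            losses = [f.result() for f in futures]
            mean_losses.append(sum(losses) / len(losses))
    return mean_losses


if __name__ == "__main__":
    print(controller(num_workers=4, num_steps=3))
```

In a multi-controller (SPMD) setup, each worker would instead run its own copy of this loop and coordinate through collectives; here the coordination logic lives in one place.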

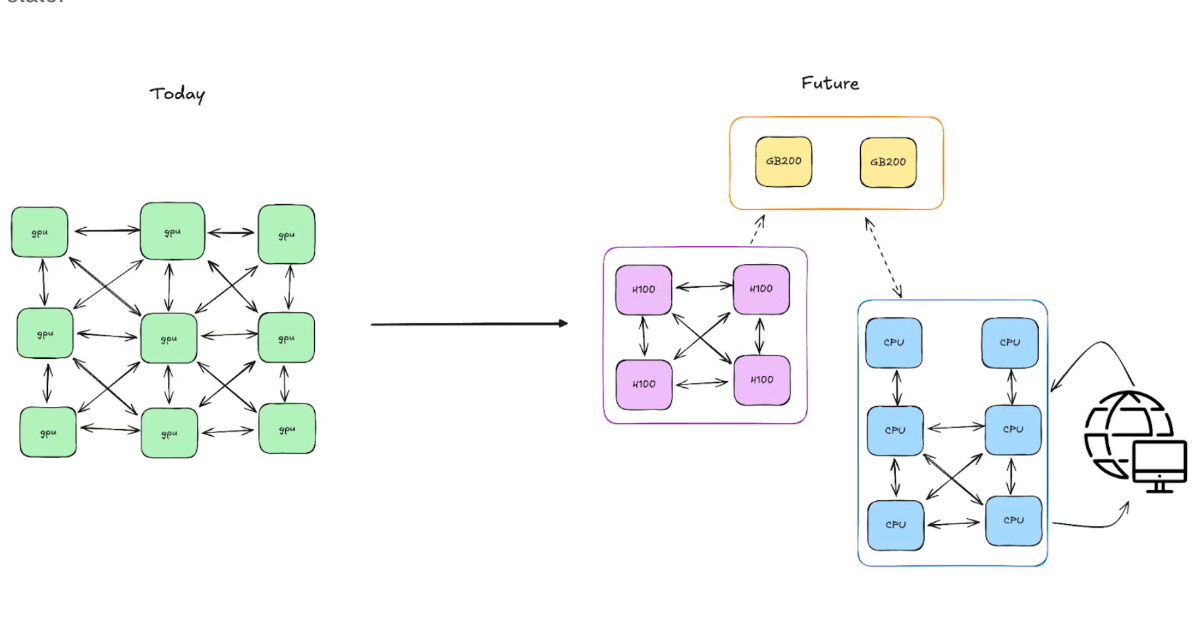

At its core, Monarch introduces process meshes and actor meshes, scalable arrays of distributed resources that can be sliced and manipulated like tensors in NumPy. That means developers can broadcast tasks to multiple GPUs, split them into subgroups, or recover from node failures using intuitive Python code. Under the hood, Monarch separates control from data, allowing commands and large GPU-to-GPU transfers to move through distinct optimized channels for efficiency.

Source: PyTorch Blog
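To make the slicing idea concrete, here is a toy, pure-Python sketch of a mesh with named dimensions. The `ToyMesh` class, its `create`, `slice`, and `broadcast` methods, and the `hosts`/`gpus` dimension names are hypothetical illustrations, not Monarch's actual API, and each "worker" is just a string label rather than a GPU process.

```python
from itertools import product
from typing import Callable, Dict, Tuple, Union

Coord = Tuple[int, ...]
Selector = Union[int, range]


class ToyMesh:
    """Hypothetical mesh of workers with named dimensions (NOT Monarch's API).

    Each member is addressed by a coordinate tuple ordered like `dims`,
    e.g. dims ("hosts", "gpus") and coordinate (1, 3).
    """

    def __init__(self, dims: Tuple[str, ...], members: Dict[Coord, str]) -> None:
        self.dims = dims
        self.members = members

    @classmethod
    def create(cls, **shape: int) -> "ToyMesh":
        # One placeholder worker label per coordinate in the full grid.
        dims = tuple(shape)
        coords = product(*(range(n) for n in shape.values()))
        return cls(dims, {c: f"worker{c}" for c in coords})

    def __len__(self) -> int:
        return len(self.members)

    def slice(self, **selection: Selector) -> "ToyMesh":
        """Select a sub-mesh by dimension name, similar to indexing an array."""

        def matches(coord: Coord) -> bool:
            for dim, sel in selection.items():
                value = coord[self.dims.index(dim)]
                # Treat a single int as a one-element range.
                allowed = sel if isinstance(sel, range) else range(sel, sel + 1)
                if value not in allowed:
                    return False
            return True

        return ToyMesh(self.dims, {c: m for c, m in self.members.items() if matches(c)})

    def broadcast(self, fn: Callable[[Coord, str], object]) -> Dict[Coord, object]:
        """Apply the same call to every member and gather results by coordinate."""
        return {c: fn(c, m) for c, m in self.members.items()}
```

For example, with `mesh = ToyMesh.create(hosts=2, gpus=4)`, `mesh.slice(hosts=0)` selects the four workers on the first host and `mesh.slice(gpus=range(0, 4, 2))` selects GPUs 0 and 2 on both hosts. A real system would map each coordinate to a process or actor and send messages instead of calling a local function.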

Developers can even catch exceptions from remote actors using standard Python try/except blocks, progressively layering in fault tolerance. Meanwhile, distributed tensors integrate directly with PyTorch, so large-scale computations still “feel” local even when running across thousands of GPUs.
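The try/except pattern can be approximated locally with Python's `concurrent.futures`, whose `Future.result()` re-raises any exception thrown inside the worker. This is a sketch under that assumption, not Monarch's error-propagation mechanism: `WorkerFailure`, `flaky_task`, and `run_with_retry` are hypothetical names, and worker 1 is hard-coded to fail so the retry path is exercised.

```python
from concurrent.futures import ThreadPoolExecutor


class WorkerFailure(RuntimeError):
    """Hypothetical error type standing in for a crashed remote actor."""


def flaky_task(worker_id: int, shard: int) -> int:
    # Simulate worker 1 failing; every other worker succeeds.
    if worker_id == 1:
        raise WorkerFailure(f"worker {worker_id} died while processing shard {shard}")
    return shard * shard


def run_with_retry(shards: list[int], num_workers: int) -> tuple[dict[int, int], list[str]]:
    results: dict[int, int] = {}
    failures: list[str] = []
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        # First attempt: shard i is assigned to worker i % num_workers.
        futures = {s: pool.submit(flaky_task, s % num_workers, s) for s in shards}
        for shard, fut in futures.items():
            try:
                # result() re-raises the exception raised inside the worker.
                results[shard] = fut.result()
            except WorkerFailure as err:
                failures.append(str(err))
                # Fault tolerance layered on with plain Python: retry on worker 0.
                results[shard] = pool.submit(flaky_task, 0, shard).result()
    return results, failures
```

The appeal of the approach the article describes is that recovery logic like this is ordinary control flow in the controller script, so it can start as a simple retry and grow more sophisticated over time.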

The system’s backend is written in Rust and powered by a low-level actor framework called hyperactor, which provides scalable messaging and robust supervision across clusters. This design lets Monarch use multicast trees, which fan messages out through intermediate hosts instead of having one sender contact every host directly, together with multipart messaging to distribute workloads efficiently without overloading any single host.
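The general multicast-tree idea can be sketched with a k-ary tree over host ranks, numbered heap-style so that rank `r` forwards to ranks `r*k+1` through `r*k+k`. The topology, the `fanout` parameter, and both function names are assumptions for illustration; the article does not describe how hyperactor actually builds or routes its trees.

```python
def multicast_children(rank: int, num_hosts: int, fanout: int) -> list[int]:
    """Children of `rank` in a k-ary tree rooted at rank 0 (heap-style numbering)."""
    if fanout < 1:
        raise ValueError("fanout must be at least 1")
    first = rank * fanout + 1
    return [c for c in range(first, first + fanout) if c < num_hosts]


def multicast_rounds(num_hosts: int, fanout: int) -> int:
    """Number of forwarding hops until every host has received the message."""
    reached, frontier, rounds = {0}, [0], 0
    while len(reached) < num_hosts:
        # Every host that just received the message forwards it to its children.
        next_frontier = [
            child for r in frontier for child in multicast_children(r, num_hosts, fanout)
        ]
        reached.update(next_frontier)
        frontier = next_frontier
        rounds += 1
    return rounds
```

With 1,000 hosts and a fanout of 8, no host sends more than 8 copies and every host is reached in 4 forwarding hops, whereas a flat broadcast would have one host send 999 copies.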

The release has drawn attention from practitioners in the AI community. Sai Sandeep Kantareddy, a senior applied AI engineer, wrote:

> Monarch is a solid step toward scaling PyTorch with minimal friction. Curious how it stacks up in real-world distributed workloads—especially vs. Ray or Dask. Would love to see more on debugging support and large-scale fault tolerance. Promising start!

Monarch is now available as an open-source project on GitHub, complete with documentation, sample notebooks, and integration guides for Lightning.ai. The framework aims to make cluster-scale orchestration as intuitive as local development, giving researchers and engineers a smoother path from prototype to massive distributed training.