Table Of Links

ABSTRACT

I. INTRODUCTION

II. BACKGROUND

III. DESIGN

- DEFINITIONS

- DESIGN GOALS

- FRAMEWORK

- EXTENSIONS

IV. MODELING

- CLASSIFIERS

- FEATURES

V. DATA COLLECTION

VI. CHARACTERIZATION

- VULNERABILITY FIXING LATENCY

- ANALYSIS OF VULNERABILITY FIXING CHANGES

- ANALYSIS OF VULNERABILITY-INDUCING CHANGES

VII. RESULT

- N-FOLD VALIDATION

- EVALUATION USING ONLINE DEPLOYMENT MODE

VIII. DISCUSSION

- IMPLICATIONS ON MULTI-PROJECTS

- IMPLICATIONS ON ANDROID SECURITY WORKS

- THREATS TO VALIDITY

- ALTERNATIVE APPROACHES

IX. RELATED WORK

CONCLUSION AND REFERENCES

VIII. DISCUSSION

This section discusses the implications for multi-project use and for prior Android security work, threats to validity, and alternative approaches.

A. IMPLICATIONS ON MULTI-PROJECTS

This subsection explores adapting the VP framework for multi-project use cases. There are two options for model training. One is to train a separate model on data from each project and apply it individually. The other is to identify project-agnostic features to build a global model applicable across multiple projects. The latter option differs from [5], which explored applying the training data of a single project to different projects. Since the latter global model option is described in Subsection VII.A, this subsection focuses on discussing the former option.

Project-Specific Model. The VP framework is adaptable to any open-source project with tracked vulnerability issues (e.g., CVEs). For a target project, the framework follows a sequence of: (1) identifying vulnerability issues, corresponding VfCs, and ViCs; (2) deriving a feature dataset from the identified data; and (3) training a classifier model, which is continuously updated as new vulnerabilities are discovered.
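The three steps above can be sketched in a few lines. This is only an illustration under stated assumptions: the `Change` record, the two numeric features (`lines_added`, `files_touched`), and the nearest-centroid classifier are hypothetical stand-ins, not the feature set or the classifiers evaluated in this paper.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Change:
    change_id: str
    lines_added: int    # hypothetical feature
    files_touched: int  # hypothetical feature
    is_vic: bool        # label from step (1): traced back from a VfC or not


def features(change):
    """Step (2): derive a numeric feature vector from one code change."""
    return (float(change.lines_added), float(change.files_touched))


def train(changes):
    """Step (3): fit a nearest-centroid model (one centroid per label).

    Assumes the history contains at least one ViC and one non-ViC change.
    """
    centroids = {}
    for label in (True, False):
        rows = [features(c) for c in changes if c.is_vic == label]
        centroids[label] = tuple(mean(col) for col in zip(*rows))
    return centroids


def predict(centroids, change):
    """Flag the change if it lies closer to the ViC centroid."""
    x = features(change)

    def dist(label):
        return sum((a - b) ** 2 for a, b in zip(x, centroids[label]))

    return dist(True) < dist(False)


history = [
    Change("c1", 400, 12, True),
    Change("c2", 350, 9, True),
    Change("c3", 20, 1, False),
    Change("c4", 35, 2, False),
]
model = train(history)
new_change = Change("c5", 300, 8, False)  # true label is unknown at review time
flagged = predict(model, new_change)

# Continuous update: a newly discovered ViC joins the history and the model is retrained.
history.append(Change("c6", 60, 3, True))
model = train(history)
```

The retraining call at the end mirrors the "continuously updated" part of step (3); in practice the retraining cadence is a deployment choice.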

While using the VP framework itself does not require a high density of ViCs, effectively evaluating the framework against a target project does. A project with many discovered CVEs indicates a high density of ViCs. Thus, the experiment in Subsection VI.B targets the AOSP frameworks/av project, known for its abundance of CVEs (e.g., over 350). This abundance exists because extensive fuzzing and other security testing were conducted on that project (e.g., between 2015 and early 2016, prior to the Android Nougat release). As fuzz testing expands to other Android components (e.g., native servers and hardware abstraction modules), we believe that future studies can leverage our experimental method to more comprehensively evaluate the accuracy of the VP framework, or of a new variant technique, across a wider range of AOSP projects.

B. IMPLICATIONS ON ANDROID SECURITY WORKS

Although it is not the primary theme of this paper, another key contribution is the definition and measurement of vulnerability fixing latency distributions per major Android release. The measurement of vulnerability fixing latency has two implications:

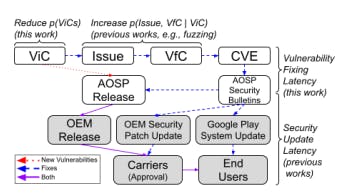

Vulnerability-fixing latency is longer than software update latency in the Android device ecosystem. Our metric focuses on capturing the time it takes to detect and fix vulnerabilities. Figure 9 illustrates the overall Android software update process, beginning when a vulnerability-inducing change (ViC) is merged into a repository. While some ViCs are detected (i.e., as issues in Figure 9) and fixed internally, others remain undetected and are included in AOSP releases, which are then shipped to end-user devices through OEMs and carriers. After the initial release, those vulnerabilities are discovered and fixed, and then published as CVEs and on AOSP security bulletins. Our vulnerability fixing latency metric measures the time from the ViC merge to the publication of fixes (i.e., VfC in Figure 9) and CVEs on AOSP security bulletins.

A significant portion of prior work focuses on reducing and measuring the time to update in-market end-user devices with available fixes. Existing studies on security updates [41][42][43][44][50] primarily measure the time from AOSP security bulletins to end-user OTA (over the air) updates. That security update time is considerably shorter than the vulnerability fixing latency reported in this study. For example, [42] indicates that updates can take ~24 days for devices with monthly security updates [41] and 41-63 days for Android OEM devices with quarterly or biannual updates when considering the update frequency, device model, and regional factors. Given that the software update time is relatively short compared to vulnerability fixing latency, our data suggests the Android security research community should focus more on reducing vulnerability fixing latency to optimize the end-to-end latency from vulnerability induction to end-user device updates.

Efforts to prevent vulnerabilities are justified given the difficulty of identifying and fixing vulnerabilities after introduction. In addition to measurement, existing Android security work focuses on accelerating both: (1) the software update process (such as TREBLE and Mainline) and (2) the vulnerability identification process. TREBLE modularizes the Android platform, allowing for fast updates of the silicon vendor-independent framework by OEMs. Mainline enables updates to the key Android system-level services and modules via Google Play Store system app updates (see Google Play System Update in Figure 9). Extensive fuzzing and other security testing efforts are employed to increase vulnerability detection and accelerate fixes [45][46][47][48]. Those previous works aim to increase the probability of identification and fixes given a vulnerability-inducing change, P(Issues, Fixes | ViC), as shown in Figure 9. However, they do not directly reduce P(ViC) itself. The vast AOSP codebase and high frequency of code changes across various software abstraction layers for different hardware components and devices make it difficult to keep up with new vulnerabilities, as captured by the vulnerability fixing latency data.
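One way to see how the two probabilities interact is the following sketch. The symbols N (number of merged changes in some period) and R (number of ViCs that remain unidentified or unfixed) are assumptions introduced here for illustration and do not appear in the paper. If every merged change has the same probability of being a ViC, then by linearity of expectation:

$$
\mathbb{E}[R] = N \cdot P(\mathrm{ViC}) \cdot \bigl(1 - P(\mathrm{Issues}, \mathrm{Fixes} \mid \mathrm{ViC})\bigr)
$$

Faster updates, fuzzing, and other security testing act on the last factor by raising P(Issues, Fixes | ViC). Lowering P(ViC) shrinks the product through a different factor, so the two kinds of effort are complementary rather than competing.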

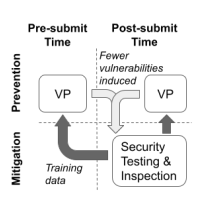

This paper thus introduces the concept of pre-submit Vulnerability Prevention (VP) at the upstream software project level as an alternative approach. VP aims to directly reduce P(ViC), the probability of having vulnerability-inducing changes. We demonstrate that our VP approach could have been effectively used to protect a key AOSP project from the historical ViCs. The cost of using VP is also relatively small (e.g., in terms of computing power) compared with some existing security testing techniques, such as fuzzing and dynamic analysis.

C. THREATS TO VALIDITY

This subsection analyzes potential threats to the validity of this study, including a discussion of underlying assumptions.

The N-fold validation experiments in this study assume timely discovery of ViCs (vulnerability-inducing changes). This assumption highlights the iterative process depicted in Figure 10: improved knowledge of ViCs enhances the VP accuracy, which in turn aids ViC prevention or discovery in both upstream (pre- or post-submit) and downstream projects (e.g., before integrating upstream patches or releasing new builds). It also implies that ViCs prevented by our VP framework (e.g., with revisions made in response to flagging) can be incorporated into model training to further reinforce its accuracy.

To bootstrap our VP process, initial extensive fuzzing is crucial. In practice, such preventive security testing usually targets software components known to be vulnerability-prone, a decision guided by security engineering considerations. This study also uses the frameworks/av component as it meets this criterion. Alternatively, one could start with our global VP model for high precision detection of a subset of ViCs. The resulting initial ViC dataset can then be used to train project-specific VP models by leveraging a wider range of feature data types than the global model.

This study implicitly assumes a focus on C/C++ projects, which are a major source of AOSP vulnerabilities. Java would have slightly different characteristics, e.g., affecting the TM (Text Mining) feature set, potentially requiring customization of effective features. This study also assumes an AOSP-like development model with registered partners (e.g., employees from public companies) as primary contributors. We recognize that volunteer-driven open-source projects (e.g., with fewer, non-obligated developers and reviewers) would present different dynamics.

Furthermore, the commercial use of Android on billions of devices drives a strong focus on code quality among its developers. The commitment to quality is evident in many other upstream and downstream open-source projects, although often to a lesser degree. Thus, while optimal feature sets vary across open-source projects, the data in this study shows that the core VP approach (consisting of the framework, tools, feature types, training process, and inference mode) remains broadly applicable within the upstream open-source domain, particularly given the high cost of fixing vulnerabilities after they are merged downstream.

The accuracy of the results also depends on the algorithm used to identify ViCs from VfCs (vulnerability-fixing changes). The manual analysis of random samples used to refine our algorithm generally showed that identified ViCs, at minimum, touch code lines near where the respective vulnerabilities are introduced. While it can be difficult to definitively link a code change to a vulnerability (or other semantically-sophisticated defects), changes made in close proximity to the vulnerability-inducing lines are more likely to be noticed during code review than those made farther away.

D. ALTERNATIVE APPROACHES

This study also considered some alternative approaches:

Using the HH (Human History) features, one can identify a subset of software engineers who have reviewed a sufficient number of code changes without missing a significant number of ViCs. When the VP framework flags a code change, it can then be assigned to one of those trusted reviewers for approval. This offers an alternative way to establish a secure code reviewer pool, especially when a dedicated internal security expert group is unavailable.
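The reviewer-pool idea can be sketched as a simple filter over review history. The function name, the input shape, and both thresholds (`min_reviews`, `max_miss_rate`) are assumptions made for illustration; the paper does not specify concrete values for "sufficient" or "significant".

```python
from collections import defaultdict


def trusted_reviewers(reviews, min_reviews=3, max_miss_rate=0.1):
    """Select reviewers with enough reviews and a low rate of missed ViCs.

    reviews: iterable of (reviewer, was_vic) pairs, one per approved change.
    was_vic is True when the approved change was later identified as a ViC,
    i.e., the reviewer missed it. Thresholds are hypothetical.
    """
    total = defaultdict(int)
    missed = defaultdict(int)
    for reviewer, was_vic in reviews:
        total[reviewer] += 1
        if was_vic:
            missed[reviewer] += 1
    return sorted(
        r for r in total
        if total[r] >= min_reviews and missed[r] / total[r] <= max_miss_rate
    )


reviews = [
    ("alice", False), ("alice", False), ("alice", False), ("alice", False),
    ("bob", False), ("bob", True), ("bob", False),
    ("carol", False),
]
pool = trusted_reviewers(reviews)
```

With this toy history, only `alice` qualifies: `bob` missed one ViC in three reviews and `carol` has too few reviews to judge. A flagged change would then be routed to someone in `pool`.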

Requiring a security test case for every fixed CVE issue offers clear benefits (e.g., to confirm the corresponding VfC and detect regressions). However, it is important to acknowledge the practical limitations. Recent AOSP CVE fixes often come with proof-of-concept (PoC) exploits that developers can leverage to reproduce and validate their fixes. Those PoC security tests can either exploit vulnerabilities or confirm the mitigation effectiveness when that is feasible with high success probability. In practice, we recognize that mandating security tests for every CVE (or VfC) is resource intensive and cannot be universally adopted across projects and organizations due to variations in engineering cultures and processes.

While it is possible to write and run a comprehensive set of security test cases for pinpointing ViCs across historical commits, this alternative approach has inherent limitations. Using a bisecting algorithm to identify the first or last ViC still incurs significant costs compared to our ML-based approach. This is particularly true because vulnerabilities often manifest only on specific devices, which necessitates testing on a wide range of devices unless the affected devices can be precisely identified. However, access to such devices is often limited. Furthermore, the reliability of test results is compromised if the security tests exhibit flakiness (i.e., sometimes failing even when they should be passing).
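To make the cost argument concrete, the following is a minimal sketch of bisection over a commit history. It assumes a hypothetical `is_vulnerable` predicate (standing in for building a commit and running a security test against it), a chronologically ordered history whose last commit is vulnerable, and a vulnerability that persists once introduced.

```python
def first_bad_commit(commits, is_vulnerable):
    """Return (index of the first vulnerable commit, number of test runs).

    Assumes commits are in chronological order, commits[-1] is vulnerable,
    and the vulnerability persists once it appears, which bisection requires.
    """
    lo, hi = 0, len(commits) - 1
    runs = 0
    while lo < hi:
        mid = (lo + hi) // 2
        runs += 1  # each probe is one build plus one security test run
        if is_vulnerable(commits[mid]):
            hi = mid      # first vulnerable commit is at mid or earlier
        else:
            lo = mid + 1  # first vulnerable commit is after mid
    return lo, runs


commits = [f"c{i}" for i in range(1000)]
vic_index = 613  # hypothetical position of the ViC
index, runs = first_bad_commit(commits, lambda c: int(c[1:]) >= vic_index)
```

For 1,000 commits, the search needs at most 10 probes, but each probe is a full build and test cycle. If the test must be repeated on several device types and rerun to compensate for flakiness, the total multiplies accordingly (for example, 10 probes on 5 device types with 3 reruns each would be 150 runs for a single vulnerability; these numbers are illustrative only).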

:::info

Author:

- Keun Soo Yim

:::

:::info

This paper is available on arXiv under the CC BY 4.0 Deed (Attribution 4.0 International) license.

:::