Table of Links

Abstract and 1 Introduction

2 Related Work

3 SUTRA Approach

3.1 What is SUTRA?

3.2 Architecture

3.3 Training Data

4 Training Multilingual Tokenizers

5 Multilingual MMLU

5.1 Massive Multitask Language Understanding

5.2 Extending MMLU to Multiple Languages and 5.3 Consistent Performance across Languages

5.4 Comparing with leading models for Multilingual Performance

6 Quantitative Evaluation for Real-Time Queries

7 Discussion and Conclusion, and References

ABSTRACT

In this paper, we introduce SUTRA, a multilingual Large Language Model architecture capable of understanding, reasoning, and generating text in over 50 languages. SUTRA’s design uniquely decouples core conceptual understanding from language-specific processing, which facilitates scalable and efficient multilingual alignment and learning. By employing a Mixture of Experts framework in both language and concept processing, SUTRA achieves both computational efficiency and responsiveness. Through extensive evaluations, we show that SUTRA surpasses existing models such as GPT-3.5 and Llama2 by 20-30% on leading Massive Multitask Language Understanding (MMLU) benchmarks for multilingual tasks. SUTRA models are also online LLMs that can use knowledge from the internet to provide hallucination-free, factual, and up-to-date responses while retaining their multilingual capabilities. Furthermore, we explore the broader implications of this architecture for the future of multilingual AI, highlighting its potential to democratize access to AI technology globally and to improve the equity and utility of AI in regions where non-English languages predominate. Our findings suggest that SUTRA not only fills pivotal gaps in multilingual model capabilities but also establishes a new benchmark for operational efficiency and scalability in AI applications.

1 Introduction

Devlin et al., 2018]. These models have been instrumental in a wide range of applications, from conversational agents to complex decision support systems. However, the vast majority of these models cater predominantly to English, which limits not only their linguistic diversity but also their accessibility and utility across different geographic and cultural contexts [Jia et al., 2019].

To address this challenge, multilingual LLMs have been developed, but these models often suffer from significant trade-offs between performance, efficiency, and scalability, particularly when extending support to a broader range of languages [Conneau et al., 2020]. The most common approach has been to train large universal models capable of understanding multiple languages. Yet such models, including BLOOM and Llama2, typically underperform in languages that are under-represented in the training data because of the difficulty of balancing language-specific nuances [Smith et al., 2021, Zhang et al., 2020].

The development of SUTRA was motivated by these inherent limitations of existing multilingual LLMs. On the one hand, there are language-specific LLMs such as HyperClova for Korean or OpenHaathi for Hindi. Scaling and managing such models is not only costly but also challenging because of the exponential growth in data and training requirements: each time a new base model is created, it must be fine-tuned separately for many different languages. On the other hand, large traditional LLMs such as BLOOM and Llama2 struggle on multilingual tasks because they must learn core capabilities and multilingual skills at the same time, which often results in confusion between languages. For example, when GPT is asked a question in Korean, formal and informal tones are often misplaced. SUTRA was developed to address these two main challenges of existing multilingual LLMs: the high computational and scaling costs of language-specific models, and the difficulties larger models face with multilingual tasks, which lead to language confusion.

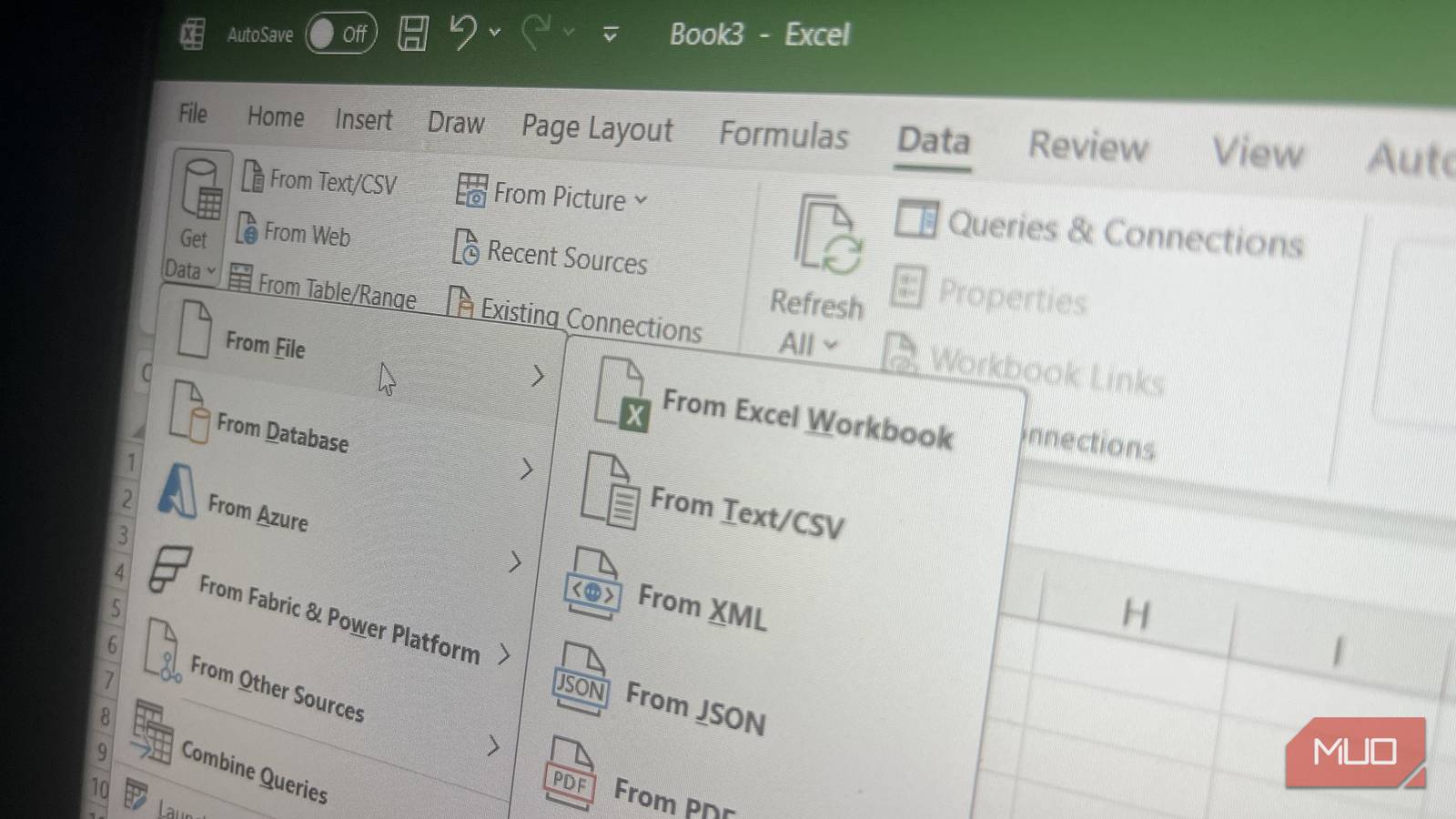

In response to these limitations, we introduce SUTRA (Sanskrit for “thread”), a transformative approach to the architecture of multilingual LLMs. SUTRA is a novel multilingual large language model architecture that is trained by decoupling concept learning from language learning, as illustrated in Figure 1. This architecture enables the core model to focus on universal, language-agnostic concepts while leveraging specialized neural machine translation (NMT) mechanisms for language-specific processing, thus preserving linguistic nuances without compromising the model’s scalability or performance [Wu et al., 2019]. SUTRA employs a Mixture of Experts (MoE) strategy, which improves the model’s efficiency by engaging only the experts relevant to the linguistic task at hand [Shazeer et al., 2017]. Furthermore, SUTRA models are internet-connected and hallucination-free: they understand queries, browse the web, and summarize information to provide the most current answers, without losing their multilingual capabilities. The combination of multilingual skills, online connectivity, and efficient language generation in SUTRA models promises to redefine the landscape of multilingual language modeling.
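The MoE strategy cited above [Shazeer et al., 2017] sends each input to a small subset of experts chosen by a learned gate. This section does not specify SUTRA's gating function, expert count, or value of k. The following is therefore only a minimal sketch of generic top-k gating in pure Python. It assumes a linear gate, omits the noise term (Shazeer et al. add tunable Gaussian noise before the top-k selection), and uses experts that preserve vector dimension. The names `top_k_gate`, `moe_forward`, and the toy experts are hypothetical illustrations, not SUTRA's implementation.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]  # hypothetical: an expert maps a vector to a same-sized vector


def softmax(logits: Sequence[float]) -> List[float]:
    # Subtract the max logit for numerical stability.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_gate(x: Vector, gate_weights: List[Vector], k: int) -> List[Tuple[int, float]]:
    """Score every expert with a linear gate, keep the k best, renormalize with softmax.

    Assumption: no noise term, unlike the noisy top-k gate of Shazeer et al. (2017).
    """
    logits = [sum(w_j * x_j for w_j, x_j in zip(w, x)) for w in gate_weights]
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    weights = softmax([logits[i] for i in top])
    return list(zip(top, weights))


def moe_forward(x: Vector, experts: List[Expert], gate_weights: List[Vector], k: int = 2) -> Vector:
    """Gate-weighted sum of the k selected experts' outputs; unselected experts are never called."""
    out = [0.0] * len(x)
    for idx, weight in top_k_gate(x, gate_weights, k):
        y = experts[idx](x)
        out = [o + weight * y_j for o, y_j in zip(out, y)]
    return out


if __name__ == "__main__":
    # Three toy experts acting on 2-dimensional inputs (purely illustrative).
    experts: List[Expert] = [
        lambda v: [2 * a for a in v],
        lambda v: [a + 1 for a in v],
        lambda v: [-a for a in v],
    ]
    gate_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
    x = [1.0, 2.0]
    print(top_k_gate(x, gate_weights, k=2))  # experts 1 and 0 are selected
    print([round(v, 4) for v in moe_forward(x, experts, gate_weights, k=2)])  # [2.0, 3.2689]
```

Only the selected experts are evaluated, so the per-input compute scales with k rather than with the total number of experts. This is the efficiency property the paragraph above attributes to the MoE strategy.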

In the subsequent sections, we outline the architecture of SUTRA and our training methodology, and present a comprehensive evaluation demonstrating its superiority over contemporary multilingual models on several benchmarks, including the Massive Multitask Language Understanding (MMLU) tasks [Hendrycks et al., 2021]. By decoupling concept learning from language processing, SUTRA sets a new paradigm in the development of LLMs, promising broader accessibility and enhanced performance across diverse linguistic landscapes.

The paper is organized as follows. First, we discuss related work in the context of SUTRA. Next, we describe the architecture and training methodology adopted. We then discuss the data used for training and evaluate both SUTRA’s multilingual and online capabilities. Finally, we discuss how to build more inclusive LLMs for the benefit of a wider community.