The ability to run Large Language Models (LLMs) locally has transformed AI development, especially for those of us on macOS. It brings privacy, cost control, and offline capability that cloud APIs can't match. But to truly harness this power in 2025, you need more than just a model file; you need a robust toolkit. Whether you’re building AI-powered applications, refining prompts, or experimenting with Retrieval-Augmented Generation (RAG), having the right local setup is key.

Forget relying solely on cloud APIs with their ticking meters and data privacy question marks. Let’s dive into 7 essential tools that will supercharge your local LLM development workflow on macOS this year, making it more efficient, powerful, and enjoyable.

1. Ollama: Your Gateway to Local LLMs

What it is:

Ollama is an open-source tool that dramatically simplifies downloading, setting up, and running popular open-weight LLMs (like Llama 3, Mistral, Gemma 2, Phi-3, and many more) directly on your machine. It provides a simple command-line interface and a local HTTP API, served by default at http://localhost:11434.

Why it’s Essential:

Ollama is the engine that makes local LLM experimentation accessible. It abstracts away much of the complexity of model management, allowing you to quickly pull and switch between different models. For AI developers on macOS, it’s the foundational piece for most local LLM work.

Workflow Impact:

Get from zero to prompting a powerful local LLM in minutes. Easily test different model architectures and sizes for your specific needs. Its local API allows seamless integration with your custom applications.

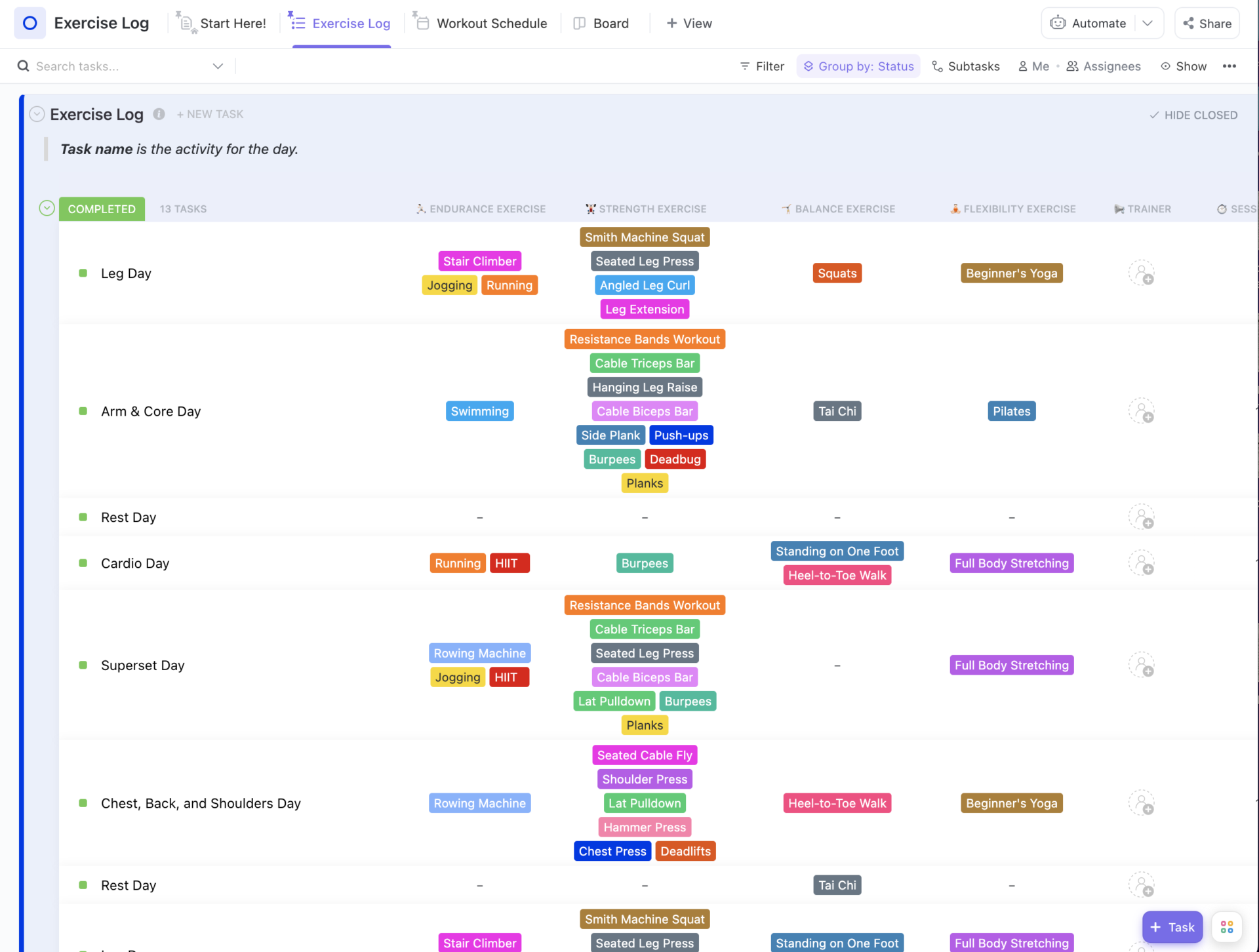
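To make "seamless integration" concrete, here is a minimal sketch that calls Ollama's `/api/generate` endpoint using only Python's standard library. It assumes Ollama is running on its default port and that you have already pulled a model named `llama3` (for example with `ollama pull llama3`). Setting `"stream": False` asks for a single JSON reply whose `response` field holds the generated text.

```python
import json
import urllib.request

# Ollama's default local endpoint; change this if you run it on another host or port.
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a POST request for Ollama's /api/generate endpoint."""
    payload = {
        "model": model,
        "prompt": prompt,
        "stream": False,  # one JSON object instead of a stream of fragments
    }
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt and return the model's text (requires a running Ollama server)."""
    req = build_generate_request(model, prompt)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    return body["response"]
```

`build_generate_request` is kept separate so you can inspect the payload without a server; `generate("llama3", "Explain RAG in one sentence.")` is the call that actually hits the local API.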

2. ServBay: The Unified Local Development Environment

What it is:

While Ollama runs the models, ServBay is an all-in-one local development environment for macOS that manages Ollama and all the other services your AI application might need. This includes multiple versions of Python, Node.js, databases like PostgreSQL or MySQL, web servers (Nginx, Caddy), and more, all through a clean GUI.

Why it’s Essential:

AI applications rarely exist in a vacuum. You’ll often need a Python backend (e.g., Flask or Django) or a Node.js API to interact with your Ollama-served LLM, and perhaps a database to store results or context for RAG. ServBay ensures these services run harmoniously with specific versions, without conflicts, and are easy to manage alongside Ollama.

Workflow Impact:

One-click setup for Ollama within a broader, managed dev environment. Easily switch PHP, Python, or Node.js versions for different AI project components. Manage databases critical for your AI apps. It’s the control panel for your entire local AI stack.
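ServBay manages these services through its GUI. If you also want a scriptable sanity check that every piece of your stack is up before you start working, a minimal stdlib-only sketch could look like this. The port numbers are common upstream defaults (11434 for Ollama, 5432 for PostgreSQL, 3306 for MySQL), assumed here rather than read from ServBay; adjust them to your own setup.

```python
import socket

# Assumed default ports, not values read from ServBay's configuration.
DEFAULT_SERVICES = {
    "Ollama": 11434,
    "PostgreSQL": 5432,
    "MySQL": 3306,
}


def is_listening(port: int, host: str = "127.0.0.1", timeout: float = 0.5) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers connection refused and timeouts
        return False


def check_stack(services: dict[str, int]) -> dict[str, bool]:
    """Map each service name to whether its port is reachable."""
    return {name: is_listening(port) for name, port in services.items()}
```

A quick status report is then just a loop over `check_stack(DEFAULT_SERVICES).items()`. A successful TCP connection only shows that something is listening on the port, not that it is the service you expect.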

3. VS Code + AI-Powered Extensions: Your Coding Cockpit

What it is:

Visual Studio Code remains a dominant code editor for a reason: it’s fast, flexible, and has an incredible ecosystem of extensions. For AI development in 2025, extensions like GitHub Copilot, specific framework toolkits (e.g., for LangChain or LlamaIndex), and tools that help interact with local LLM APIs are indispensable.

Why it’s Essential:

Writing Python or JavaScript to interact with your local LLMs, building frontends for your AI apps, or crafting intricate prompts all happen here. AI extensions provide intelligent code completion, help debug AI-specific logic, and can even assist in generating test cases or documentation.

Workflow Impact:

Faster code development, better code quality, and easier integration of LLM capabilities into your projects, all within a familiar and powerful editing environment.

4. Open WebUI (or similar): The Interactive LLM Playground

What it is:

Ollama’s CLI is fine for quick tests, but tools like Open WebUI (formerly Ollama WebUI) or other emerging solutions like LibreChat or LobeChat provide a user-friendly, chat-based interface for your locally running Ollama models. Many support multi-model interaction, RAG features, and prompt management.

Why it’s Essential:

For rapid prompt engineering, testing model responses, and general interaction without writing code for every query, a dedicated UI is invaluable. These tools often offer features beyond what the basic Ollama CLI provides, such as conversation history, model switching on the fly, and sometimes even document interaction for RAG.

Workflow Impact:

Significantly speeds up prompt iteration and model evaluation. Allows for easier demonstration of local LLM capabilities. Many support RAG by allowing you to chat with your documents.
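Conversation history and on-the-fly model switching boil down to one idea: the front end keeps the message list and resends all of it on every turn. A simplified sketch of that idea against Ollama's `/api/chat` endpoint follows; it is an illustration of the pattern, not how Open WebUI or any other tool is actually implemented, and it assumes the default port and a pulled `llama3` model.

```python
import json
import urllib.request
from typing import Optional

OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"


class ChatSession:
    """Keep a running message history and send it to Ollama on each turn."""

    def __init__(self, model: str, system_prompt: Optional[str] = None):
        self.model = model
        self.messages: list[dict[str, str]] = []
        if system_prompt:
            self.messages.append({"role": "system", "content": system_prompt})

    def build_payload(self, user_text: str) -> dict:
        """Return the JSON body for the next turn, including all prior messages."""
        return {
            "model": self.model,
            "messages": self.messages + [{"role": "user", "content": user_text}],
            "stream": False,
        }

    def send(self, user_text: str, timeout: float = 120.0) -> str:
        """Send one user turn and record both sides (requires a running server)."""
        req = urllib.request.Request(
            OLLAMA_CHAT_URL,
            data=json.dumps(self.build_payload(user_text)).encode("utf-8"),
            headers={"Content-Type": "application/json"},
            method="POST",
        )
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            reply = json.loads(resp.read().decode("utf-8"))["message"]
        # Commit the turn to history only after the request succeeded.
        self.messages.append({"role": "user", "content": user_text})
        self.messages.append({"role": reply["role"], "content": reply["content"]})
        return reply["content"]

    def switch_model(self, model: str) -> None:
        """Change models mid-conversation; the existing history is kept and resent."""
        self.model = model
```

Because the whole history travels with each request, long conversations grow the prompt every turn, which is why chat UIs eventually trim or summarize older messages.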

5. ChromaDB / LanceDB: Your Local Vector Store for RAG

What it is:

For building applications with Retrieval-Augmented Generation (RAG), where the LLM is given relevant passages from your own documents at query time, a vector database is essential. Open-source options like ChromaDB or LanceDB are designed to be lightweight and easy to run locally for development.

Why it’s Essential:

RAG is a dominant pattern for making LLMs more useful with specific, private data. These local vector databases allow you to create, store, and query embeddings from your documents entirely on your Mac, ensuring your knowledge base remains private.
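At its core, the query step of a vector store is "find the stored embeddings closest to the query embedding." The toy, pure-Python sketch below shows that idea with cosine similarity; it is not ChromaDB's or LanceDB's API. The class name `TinyVectorStore` is hypothetical, and the three-dimensional vectors in the example are made up; real embeddings come from an embedding model and have hundreds of dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # avoid dividing by zero for empty vectors
    return dot / (norm_a * norm_b)


class TinyVectorStore:
    """Hypothetical toy store: keeps (id, text, embedding) and ranks by cosine similarity."""

    def __init__(self):
        self.items: list[tuple[str, str, list[float]]] = []

    def add(self, doc_id: str, text: str, embedding: list[float]) -> None:
        self.items.append((doc_id, text, embedding))

    def query(self, query_embedding: list[float], k: int = 3) -> list[tuple[str, float]]:
        """Return the k most similar (doc_id, score) pairs, best first (brute force)."""
        scored = [
            (doc_id, cosine_similarity(query_embedding, emb))
            for doc_id, _text, emb in self.items
        ]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]


store = TinyVectorStore()
store.add("cats", "Cats purr.", [1.0, 0.0, 0.0])
store.add("dogs", "Dogs bark.", [0.0, 1.0, 0.0])
store.add("kittens", "Kittens are young cats.", [0.9, 0.1, 0.0])
top = store.query([1.0, 0.0, 0.0], k=2)  # "cats" first, then "kittens"
```

Libraries like ChromaDB and LanceDB add what this sketch leaves out: persistence to disk, metadata filtering, and indexes that avoid scoring every stored vector on each query.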

Workflow Impact:

Develop and test RAG pipelines end to end, locally. Easily experiment with different embedding strategies and document chunking without relying on cloud-based vector databases during development. ServBay can help manage the Python environment needed to run these.
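Chunking is one of the knobs you will tune most often. A minimal sketch of the simplest strategy, fixed-size character windows with overlap, might look like this; the default sizes are arbitrary assumptions, and real pipelines often split on sentences, paragraphs, or tokens instead.

```python
def chunk_text(text: str, chunk_size: int = 500, overlap: int = 50) -> list[str]:
    """Split text into fixed-size character windows that overlap by `overlap` chars."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    step = chunk_size - overlap  # how far each new window advances
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks
```

The overlap keeps a sentence that straddles a boundary retrievable from at least one chunk, at the cost of storing some text twice.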

6. Bruno / Postman: Mastering Local LLM APIs

What it is:

Ollama exposes your local models through an HTTP API, by default at http://localhost:11434. Tools like the open-source Bruno or the well-known Postman are essential for testing these local API endpoints, crafting requests, and inspecting responses. They are also crucial if you’re building your own API around an LLM.

Why it’s Essential:

Provides a structured way to work with the LLM’s API for complex requests and batch processing, and to exercise the calls your application will make programmatically. Bruno’s Git-friendly approach, storing collections as plain-text files, is a bonus for versioning your API call collections.

Workflow Impact:

Efficiently debug your local LLM API interactions, automate testing of different prompts or parameters, and ensure your application’s backend can communicate correctly with the local AI model.
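When you inspect a request in Bruno or Postman without `"stream": false`, Ollama's `/api/generate` returns newline-delimited JSON: each line carries a `response` fragment, and the last object has `"done": true`. Once you move from manual inspection to scripted batch tests, you need to reassemble that stream. A minimal sketch, assuming that line format:

```python
import json
from typing import Iterable


def collect_stream(lines: Iterable[bytes]) -> tuple[str, dict]:
    """Join the 'response' fragments of an Ollama streaming reply.

    Returns the full text and the final object (the one with "done": true),
    which carries summary fields such as timing and token counts.
    """
    parts = []
    final = {}
    for raw in lines:
        line = raw.strip()
        if not line:
            continue  # tolerate blank lines between objects
        obj = json.loads(line)
        parts.append(obj.get("response", ""))
        if obj.get("done"):
            final = obj
            break
    return "".join(parts), final
```

Since an `http.client.HTTPResponse` can be iterated line by line, you can pass the object returned by `urllib.request.urlopen(...)` straight into `collect_stream`.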

7. Git & GitHub/GitLab: Versioning Your AI Masterpieces

What it is:

The undisputed champions of version control. For AI projects, Git (and platforms like GitHub or GitLab) isn’t just for code; it’s crucial for tracking prompts, model configurations, datasets (or pointers to them), and experimentation notebooks.

Why it’s Essential:

Reproducibility is key in AI. Version controlling your prompts, parameters, and the code that orchestrates your LLM interactions allows you to track experiments, revert to previous versions, and collaborate effectively.

Workflow Impact:

Provides a safety net for experimentation. Facilitates teamwork on AI projects. Helps document the evolution of your prompts and model configurations.
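Prompts and parameters version best when they live in files whose bytes change only when their content does. One way to get that, sketched here with hypothetical helper names and a hypothetical config shape, is to write configs as JSON with sorted keys and fixed indentation, and to tag experiment results with a short content hash of that text.

```python
import hashlib
import json
from pathlib import Path


def serialize_config(config: dict) -> str:
    """Stable, diff-friendly JSON: sorted keys, fixed indent, trailing newline."""
    return json.dumps(config, indent=2, sort_keys=True, ensure_ascii=False) + "\n"


def config_hash(text: str) -> str:
    """Short SHA-256 prefix for tagging experiment results with the exact config."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()[:12]


def save_prompt_config(path: Path, config: dict) -> str:
    """Write the config to `path` (commit it with Git) and return its hash."""
    text = serialize_config(config)
    path.write_text(text, encoding="utf-8")
    return config_hash(text)


# Hypothetical config shape; "options" mirrors the parameters you might pass to Ollama.
example = {
    "model": "llama3",
    "system": "Answer concisely.",
    "options": {"temperature": 0.2},
}
```

Because key order no longer matters, reordering fields in code never produces a spurious Git diff, and the same hash always points back to the same committed config.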

Developing with LLMs locally on your Mac in 2025 is incredibly empowering. By combining the direct model access of Ollama with the robust environment management of ServBay, a powerful editor like VS Code, a good LLM UI, a local vector database for RAG, API testing tools, and solid version control, you create a private, cost-effective, and highly productive AI development stack.

This toolkit allows you to move from idea to AI-powered application efficiently, all while keeping your data secure and your workflow streamlined on your macOS machine.

What are your go-to tools for local LLM development? Share your favorites in the comments!