4 Results

4.1 THINK-AND-EXECUTE Improves Algorithmic Reasoning

We start by comparing our framework with direct prompting and zero-shot CoT (Kojima et al., 2022) in Table 1. Zero-shot CoT performs better than direct prompting, with an average improvement of 11.1% with GPT-3.5-Turbo, suggesting that zero-shot CoT is a strong baseline. Our THINK-AND-EXECUTE, however, significantly outperforms both regardless of model size, which indicates that explicitly generating a plan is a more effective way to improve an LLM’s reasoning capabilities than simply encouraging the LLM to generate its intermediate reasoning steps.

4.2 Task-level Pseudocode Prompts Benefit a Wider Range of Algorithmic Reasoning Tasks than Instance-specific Python Code

In Table 1, PoT shows performance gains over direct prompting in some tasks (e.g., Navigate; Tracking Shuffled Objects), using Python code generated specifically for each instance and taking the corresponding interpreter output as the answer. However, this improvement is difficult to generalize to all tasks, e.g., 0.4% accuracy in both Dyck Language and Temporal Sequences with GPT-3.5-Turbo. By contrast, THINK-AND-EXECUTE outperforms PoT and direct prompting in all tasks with GPT-3.5-Turbo. This suggests that devising a task-level strategy in pseudocode and applying it to each instance can benefit an LLM’s reasoning in a wider range of algorithmic reasoning tasks than generating instance-specific Python code.
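To make the contrast concrete, the following is a minimal sketch, not one of the prompts used in the experiments. It shows a single task-level routine for a Navigate-style task that is written once and applied unchanged to every instance, whereas an instance-specific approach such as PoT would generate a fresh program per question. The `(dx, dy)` step encoding, the function name `returns_to_start`, and both example instances are assumptions made for illustration. The routine is run in a real interpreter here only so that the example can be checked.

```python
from typing import Iterable, Tuple

# Hypothetical encoding: each instruction is a (dx, dy) displacement.
Step = Tuple[int, int]


def returns_to_start(steps: Iterable[Step]) -> bool:
    """Task-level logic: track the position and test whether it ends at the origin."""
    x, y = 0, 0
    for dx, dy in steps:
        x, y = x + dx, y + dy
        # Print the intermediate state so every step of the logic stays visible.
        print(f"moved ({dx}, {dy}) -> now at ({x}, {y})")
    return (x, y) == (0, 0)


# The same routine is reused for every instance of the task.
instance_a = [(0, 2), (1, 0), (0, -2), (-1, 0)]  # forward 2, right 1, back 2, left 1
instance_b = [(0, 3), (0, -1)]                   # forward 3, back 1

answer_a = returns_to_start(instance_a)
answer_b = returns_to_start(instance_b)
print("Yes" if answer_a else "No")
print("Yes" if answer_b else "No")
```

Only the input changes between instances; the logic is shared, which is the property the task-level strategy relies on.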

4.3 The Logic Discovered by an LLM can be Transferred to SLMs

We further explore whether the pseudocode prompt written by an LLM (i.e., GPT-3.5-Turbo as the instructor) can be applied to smaller LMs, namely the CodeLlama family in Table 1. When applying the pseudocode prompts generated by GPT-3.5-Turbo, CodeLlama-7B and -13B significantly outperform direct prompting. Moreover, THINK-AND-EXECUTE with CodeLlama-13B performs comparably to GPT-3.5-Turbo with PoT and direct prompting.
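The transfer setting can be sketched as follows, under stated assumptions: the instructor is queried once per task, and the resulting pseudocode prompt is shared by every smaller model and every instance. The `LM` callable interface, the prompt wording, and the stub models are hypothetical and stand in for real model calls; this is not the authors' implementation.

```python
from typing import Callable, Dict, List

# Hypothetical interface: prompt text in, completion text out.
LM = Callable[[str], str]


def think(instructor: LM, task_description: str) -> str:
    """Query the instructor once per task for a task-level pseudocode prompt."""
    return instructor(f"Write pseudocode that solves this task:\n{task_description}")


def execute(reasoner: LM, pseudocode: str, instance: str) -> str:
    """Ask a (possibly smaller) model to apply the shared pseudocode to one instance."""
    return reasoner(f"{pseudocode}\n\nInput: {instance}\nAnswer:")


def run_task(
    instructor: LM,
    reasoners: Dict[str, LM],
    task_description: str,
    instances: List[str],
) -> Dict[str, List[str]]:
    pseudocode = think(instructor, task_description)  # generated once, reused below
    return {
        name: [execute(lm, pseudocode, instance) for instance in instances]
        for name, lm in reasoners.items()
    }


# Stub models so the sketch runs without any external service.
calls = {"instructor": 0}


def stub_instructor(prompt: str) -> str:
    calls["instructor"] += 1
    return "def solve(x): ..."


def stub_reasoner(prompt: str) -> str:
    return "stub answer"


results = run_task(
    stub_instructor,
    {"small-lm-a": stub_reasoner, "small-lm-b": stub_reasoner},
    "Navigate",
    ["q1", "q2", "q3"],
)
```

With this split, the cost of discovering the logic is paid once by the instructor, while each smaller model only has to follow it.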

4.4 Pseudocode Better Describes the Logic for Solving a Task than Natural Language

We also compare our approach with NL planning, a variant of ours that uses natural language instead of pseudocode to write the task-level instruction. In practice, we provide human-written NL plans that contain a similar amount of information to P in the meta prompt and use them to generate the task-level NL plan for the given task. Surprisingly, although the LMs are fine-tuned to follow natural language instructions, we find that task-level pseudocode prompts boost their performance more than NL plans do (Table 1).

4.5 Ablation Studies

Components of the pseudocode prompt. We conduct an ablation study on each component of the pseudocode prompt. For this, we prepare four types of pseudocode prompts: (1) human-written pseudocode; (2) human-written prompt w/o comments and semantics, obtained by removing the comments that explain the code and replacing variable names with meaningless letters such as X, Y, and Z; (3) human-written prompt w/ for loop; and (4) human-written prompt w/ intermediate print() statements. The results are in Figure 3. Model performance decreases significantly when applying prompts w/o comments and semantics, especially in Temporal Sequences. This implies that semantics play an important role in guiding the LLMs to apply the discovered logic and reason with it accordingly. We also find that printing out the intermediate execution steps with print() is crucial for reasoning, which is consistent with the finding of Wei et al. (2022).
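As an illustration of the "w/o comments and semantics" variant, the sketch below strips comments and replaces meaningful names with single letters. It assumes the prompt fragment is valid Python so that the standard `ast` module can parse it (`ast.unparse` requires Python 3.9 or later). The fragment `ORIGINAL`, the helper `strip_comments_and_semantics`, and the name mapping are hypothetical and are not taken from the prompts used in the study.

```python
import ast

# Hypothetical prompt fragment; it must be valid Python for `ast` to parse it.
ORIGINAL = '''
def is_free(schedule, hour):
    # A person is free when the hour lies outside every busy interval.
    for start, end in schedule:
        if start <= hour < end:
            return False
    return True
'''


class _Rename(ast.NodeTransformer):
    """Replace function, argument, and variable names according to a mapping."""

    def __init__(self, mapping):
        self.mapping = mapping

    def visit_FunctionDef(self, node):
        node.name = self.mapping.get(node.name, node.name)
        self.generic_visit(node)  # continue into arguments and body
        return node

    def visit_arg(self, node):
        node.arg = self.mapping.get(node.arg, node.arg)
        return node

    def visit_Name(self, node):
        node.id = self.mapping.get(node.id, node.id)
        return node


def strip_comments_and_semantics(source, mapping):
    tree = _Rename(mapping).visit(ast.parse(source))
    # Comments are not stored in the AST, so unparsing drops them.
    return ast.unparse(tree)


ABLATED = strip_comments_and_semantics(
    ORIGINAL,
    {"is_free": "F", "schedule": "X", "hour": "Y", "start": "Z", "end": "W"},
)
print(ABLATED)
```

The ablated fragment computes exactly the same thing as the original; what it loses is the comment and the descriptive names, which is the information this ablation removes.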

Generating the analysis before the pseudocode prompt. Table 2 shows a notable decrease in model performance when generating pseudocode prompts without conducting the

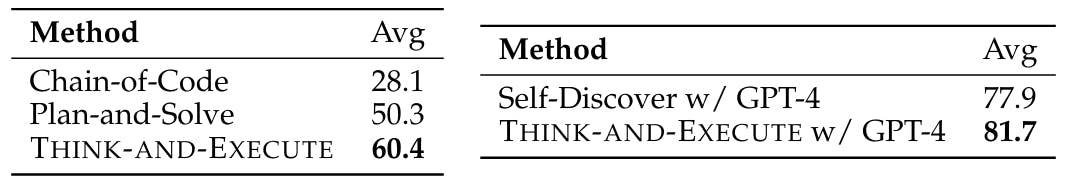

4.6 Comparison with other Baselines

We further compare THINK-AND-EXECUTE with three additional baselines: (1) Plan-and-Solve (Wang et al., 2023), where an LLM sequentially generates a natural language plan for solving the given instance, step-by-step reasoning according to the plan, and the final answer; (2) Chain-of-Code (Li et al., 2023), where Python code is generated as a part of intermediate reasoning steps specifically for a given instance; (3) Self-Discover (Zhou et al., 2024), a concurrent work that devises a task-level reasoning structure in a JSON format before performing inference on the instance. First, as presented in Table 3 (Left), we find that THINK-AND-EXECUTE largely outperforms Plan-and-Solve and Chain-of-Code, by 10.9 and 32.3 percentage points in accuracy, respectively. Second, while Self-Discover also incorporates task-level instruction, Table 3 (Right) shows that our THINK-AND-EXECUTE with pseudocode prompts performs better when using GPT-4 (Achiam et al., 2023).[3] These findings indicate that generating (1) task-level instruction with (2) pseudocode can better represent the necessary logic for solving a task and benefit an LLM’s algorithmic ability.

[3] We use gpt-4-0613 for GPT-4.

Authors:

(1) Hyungjoo Chae, Yonsei University;

(2) Yeonghyeon Kim, Yonsei University;

(3) Seungone Kim, KAIST AI;

(4) Kai Tzu-iunn Ong, Yonsei University;

(5) Beong-woo Kwak, Yonsei University;

(6) Moohyeon Kim, Yonsei University;

(7) Seonghwan Kim, Yonsei University;

(8) Taeyoon Kwon, Yonsei University;

(9) Jiwan Chung, Yonsei University;

(10) Youngjae Yu, Yonsei University;

(11) Jinyoung Yeo, Yonsei University.