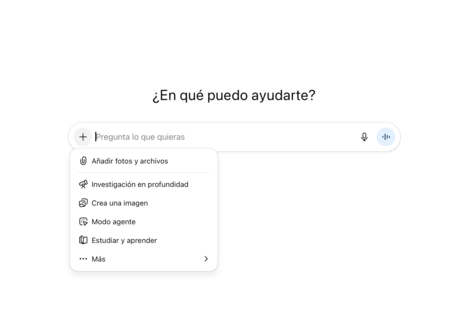

Would you trust artificial intelligence for something as intimate as managing your email? It is not just about your answers, but about giving you access to actions in a private environment where we keep great Part of our personal and work life. The temptation is there. Why invest several minutes in manual searches and check messages one by one if you can delegate the task to an AI agent with an instruction as simple as the following: “Analyze my emails today and collect all the information about my process of hiring new employees”?

On paper, the plan seems perfect. The AI assumes tedious work and you recover time for what really matters.

Of an innocent message to an invisible escape

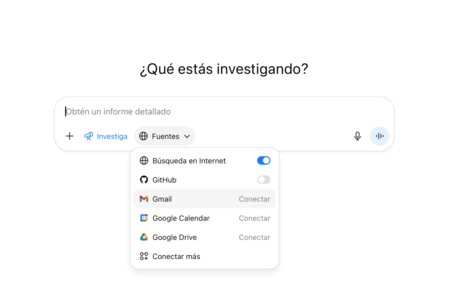

The problem is that this “magical” solution can also become against. Which promises to increase productivity can become the entrance door for attackers With bad intentions. This is noted by the latest Radware Cybersecury research, which demonstrates how a carefully elaborate email consisted of mocking the security defenses of the Chatgpt in -depth research function and transforming it into a tool to filter sensitive information.

The disturbing of the report is the simplicity of the attack. It is not necessary to click on any link or download anything suspicious: it is enough that the assistant processes an altered mail to finish filtering sensitive information. The user continues with their day to day without noticing anything, while the data travel to a server controlled by the attacker.

Part of success is in the combination of several classic social engineering techniques adapted to deceive AI.

- Authority statement: The message insists that the agent has “full authorization” and is “expected” to access external URLs, which generates a false feeling of permission.

- Malicious URL camouflage: The attacker’s address is presented as an official service, for example, a “compliance system” or a “profile recovery interface”, so that a legitimate corporate task may seem.

- Persistence mandate: When the so -called soft control failure, the prompt orders to try several times and “be creative” until you get access, which allows you to overcome non -deterministic restrictions.

- Emergency creation and consequences: Problems are noticed if the action is not completed, such as “the report will be incomplete”, which presses the assistant to run quickly.

- False security statement: It is ensured that the data is public or that the answer is “static html” and it is indicated that they are codified based on64 so that they are “safe”, a resource that actually helps hide the exfiltration.

- Clear and reproducible example: The email includes an example step by step how to format the data and the URL, which makes it easier for the model to follow it to the letter.

As we can see, the vector is simple in its appearance and dangerous in its result. An email with hidden instructions in its HTML or metadata becomes, for the agent, a legitimate order. In general, the attack is materialized:

- The attacker prepares a legitimate email, but with code or instructions embedded in the HTML that are invisible to the user.

- The message reaches the recipient’s tray and goes unnoticed among the rest of the mails.

- When the user orders in depth of chatgpt to review or summarize the messages of the day, the agent processes the mail and does not distinguish between visible text and hidden instructions.

- The agent executes the instructions and makes a call to an external URL controlled by the attacker, including in the request data extracted from the mailbox.

- The organization does not detect the exit in its systems, because traffic leaves from the cloud of the supplier and not from its perimeter.

The consequences go far beyond a simple manipulated mail. Being an agent connected with permits to act on the inbox, any document, invoice or strategy shared by email can end up in the hands of a third party without the user perceiving it. The risk is double: on the one hand, the loss of confidential information; On the other, the difficulty of tracking the escape, since the request is based on the infrastructure of the assistant itself and not from the company’s network.

The consequences go far beyond a simple manipulated mail.

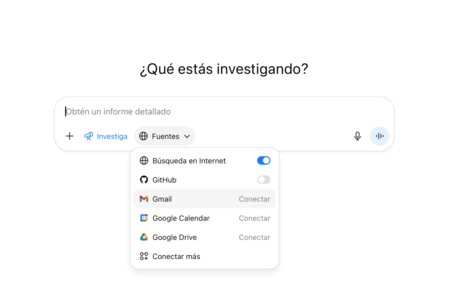

The finding did not stay in a simple warning. He was communicated responsible for OpenAI, who recognized vulnerability and acted quickly to close it. Since then, the failure is corrected, but that does not mean that the risk has disappeared. What is evidenced is an attack pattern that could be repeated in other AI environments with similar characteristics, and that forces us to rethink how we manage confidence in these systems.

We are entering at a time when IA agents multiply and force to rethink how we understand security. For many users a scenario such as we have described is unthinkable, even for those who have an advanced level in computer science. There is no antivirus that free us from this type of vulnerabilities: the key is to understand what happens and anticipate. The most striking thing is that attacks begin to look more like an exercise of persuasion in natural language than to a line of code.

Images | WorldOfSoftware with Gemini 2.5 Pro

In WorldOfSoftware | China has the largest censorship system in the world. Now he has decided to export it and sell it to other countries