6 Discussion

Feedback Mechanisms Study

Although feedback was optional, monetary incentives and a structured study setting prompted participants to share opinions on all highlights, raising questions about engagement in settings without such incentives. Initially, we assumed the Comparison method would reduce anchoring bias and increase critical thinking. However, the F1 scores of Highlights and Comparison contradict this assumption. Both F1 and IAA scores were expectedly low, as media bias perception is highly subjective and comparable approaches report similar scores (Spinde et al. 2021c; Hube and Fetahu 2019; Recasens, Danescu-Niculescu-Mizil, and Jurafsky 2013). Comparison was less efficient than Control, possibly because participants had to manage two questions simultaneously. Interestingly, the Highlights method led to longer engagement times, indicating a mix of focused attention and prolonged article interaction, possibly enhancing contextual critical thinking.
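To make the F1 comparison concrete, the following minimal sketch computes F1 as the harmonic mean of precision and recall for binary bias labels. The function name `f1_score`, the label strings, and the toy label lists are illustrative assumptions; this is not the study's evaluation code or data.

```python
def f1_score(gold, predicted, positive="biased"):
    """Compute F1 for one positive class from two equally long label lists."""
    pairs = list(zip(gold, predicted))
    tp = sum(1 for g, p in pairs if g == positive and p == positive)
    fp = sum(1 for g, p in pairs if g != positive and p == positive)
    fn = sum(1 for g, p in pairs if g == positive and p != positive)
    if tp == 0:
        # No true positives: precision and recall are zero or undefined.
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Toy data (assumed): expert labels versus labels derived from participants.
expert = ["biased", "biased", "not biased", "not biased", "biased"]
participants = ["biased", "not biased", "not biased", "biased", "biased"]
print(round(f1_score(expert, participants), 3))  # 0.667
```

With two true positives, one false positive, and one false negative, precision and recall are both 2/3, so F1 is 2/3 as well.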

Further, Table 5 highlights issues with the training data for BABE,[17] treated as the ground truth. Discrepancies between expert labels suggest that BABE may not be entirely accurate, especially since the dataset often misclassifies subtly biased sentences as “not biased”. However, achieving complete accuracy in bias classification may be unattainable due to the subjective nature of bias and the misleading concept of a single, absolute ground truth (Xu and Diab 2024).

NewsUnfold

NewsUnfold showcases how the feedback mechanism gathers bias annotations in a news-reading environment. The system increases IAA by 26.31%, achieves high agreement with expert labels, and corrects misclassifications through feedback. For example, this sentence was initially deemed non-biased but corrected to biased:

“That level of entitlement is behind Democrats’ slipping control on black voters, as demonstrated by 2020 exit polls showing that, for example, just 79% of black men voted for Biden, a percentage that has been dropping since 2012.”

Despite having a lower label count than BABE, the feedback dataset demonstrates greater agreement with expert labels. Furthermore, statistical analysis indicates that the improvement in IAA cannot be attributed solely to the annotation count (Section 5). Although the increase in the dataset size likely drives the rise in F1-Score, the data has been shown to be reliable. This suggests that readers using the feedback mechanism (2 in Figure 4) offer a reliable alternative to costly expert annotators, facilitating the collection of more extensive data sets. The scarcity of high-quality media bias datasets highlights the need to integrate feedback mechanisms on NewsUnfold or other digital and social platforms. Likewise, other classifiers, such as misinformation classifiers, can use similar mechanisms to gather data and improve accuracy while augmenting the cognitive abilities of readers (Pennycook and Rand 2022; Spinde et al. 2022).
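One way reader feedback could overturn a classifier label, as in the corrected sentence quoted above, is a majority vote over binary feedback. The sketch below is only an illustration under that assumption: the aggregation rule, the function name `corrected_label`, and the `min_votes` threshold are hypothetical and are not described in this section.

```python
from collections import Counter


def corrected_label(classifier_label, reader_votes, min_votes=3):
    """Return the sentence label after applying binary reader feedback.

    classifier_label: "biased" or "not biased", the model's initial output.
    reader_votes: list of "biased" / "not biased" strings from readers.
    min_votes: hypothetical threshold; with fewer votes the model label stays.
    """
    if len(reader_votes) < min_votes:
        return classifier_label
    counts = Counter(reader_votes)
    biased, not_biased = counts["biased"], counts["not biased"]
    if biased == not_biased:
        # A tie gives no clear signal, so the model label is kept.
        return classifier_label
    return "biased" if biased > not_biased else "not biased"


# Assumed votes for a sentence the classifier initially marked as not biased.
votes = ["biased", "biased", "not biased", "biased"]
print(corrected_label("not biased", votes))  # biased
```

Requiring a minimum number of votes keeps a single reader from flipping a label, at the cost of slower corrections for rarely read sentences.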

Limited written feedback through the feedback mechanism ( in Figure 4) might be due to typing disruptions during reading. Most feedback highlighted false negatives, indicating it is simpler to spot bias than explain its absence. Currently, nuances are not well-captured by the binary feedback in the first iteration, as all design decisions are a trade-off between an effortless process that drives engagement and more complex labeling. While more friction can foster deeper thinking, we decided on a simple, binary feedback version (3 in Figure 4). Scales similar to Karmakharm, Aletras, and Bontcheva (2019) could turn binary feedback into a spectrum and include multiple scales for other biases.

Although direct quotes can exhibit bias, they do not inherently impact neutrality (Recasens, Danescu-Niculescu-Mizil, and Jurafsky 2013). In our dataset, we observe significant disagreements regarding quotes (Section 5), indicating confusion among readers regarding their interpretation. Therefore, future iterations should incorporate different visual cues for quotes and may consider excluding them from the bias indication and training dataset.

Expanding the data collection phase could have enlarged the dataset but would potentially have carried design flaws. Hence, we decided to follow a user-centric design approach with a short collection phase to allow for quick iterations of feedback while showcasing data quality capabilities early on. While the RoBERTa model fine-tuned with BABE was used, NewsUnfold could have tested other models. However, RoBERTa outperformed other models in a previous study (Spinde et al. 2021b).

A common challenge in projects that rely on community contributions is keeping volunteers motivated over time (Soliman and Tuunainen 2015). With NewsUnfold, we aim to increase motivation by highlighting bias in a news reading application, offering a reason for people to use the platform that goes beyond annotation. The project targets reader groups similar to those interested in AllSides and Ground News, which have demonstrated the viability of such business concepts. For testing and iterative feedback, we opted for a binary approach, feasible with our resources at the time, predicated on the assumption that feedback would primarily come from individuals valuing unbiased information. In later versions, NewsUnfold will incorporate insights from a recent literature review on media bias detection and mitigation (Xu and Diab 2024). The authors suggest accounting for cultural and group backgrounds in label creation and output generation. By adapting its output to readers’ backgrounds, NewsUnfold could extend its appeal beyond those specifically seeking unbiased information. Gamification elements or unlocking additional content through giving feedback could further increase motivation (Zeng, Tang, and Wang 2017).

The use cases of the feedback mechanisms extend beyond NewsUnfold. Any digital and social media that includes text can apply the feedback mechanism to raise readers’ awareness and collect feedback. NewsUnfold, as an application, integration, or browser plug-in, could offer an alternative to traditional news platforms. Incorporating feedback mechanisms with customizable classifiers, such as those for detecting misinformation, stereotypes, emotional language, generative content, or opinions, could allow users to analyze the content they consume in greater detail. Simultaneously, they contribute to a community dataset with an open-source purpose, which has shown potential in other applications (Cooper et al. 2010). We believe that by offering something useful, the feedback mechanism on NewsUnfold can gather valuable information in the long run, even if readers do not interact with it daily.

While systems like NewsUnfold can help understand bias and language, educate readers, and foster critical reading, we must closely monitor data quality and include readers’ backgrounds while meeting data protection standards. Bias in the reader base or attacks from malicious groups, for example, any politically extreme group interested in shifting the classifier according to their ideology, could lead to a self-reinforcing loop that inserts bias and skews classifier results towards a specific perspective, potentially harming minorities. To avoid deliberate attacks, we include spammer detection before training. In the future, we will monitor feedback beyond F1 scores and IAA as they only capture the agreement between raters, which is a standard measure in the media bias domain but does not fully indicate the quality of annotations. They help us set a baseline to check how bias detection systems handle human feedback, backed up by the manual analysis and NewsUnfold’s ease of use. Other possibilities include a soft labeling approach (Fornaciari et al. 2021), adding adversarial examples into the training data (Goyal et al. 2023), employing a HITL approach where experts try to break the model (Wallace et al. 2019), identifying and correcting perturbations (Goyal et al. 2023), or using a more complex probabilistic model for label generation (Law and von Ahn 2011).
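As a rough illustration of what soft labeling could mean for binary reader feedback, the sketch below turns the votes on a sentence into the share of readers who marked it as biased. This is a simplified stand-in and not the multi-task method of Fornaciari et al. (2021); the function name `soft_label` and the example votes are assumptions.

```python
def soft_label(votes):
    """Return the share of "biased" votes, or None if there are no votes.

    A value near 0.5 signals reader disagreement that a hard
    biased / not biased label would hide.
    """
    if not votes:
        return None
    return sum(1 for v in votes if v == "biased") / len(votes)


# Assumed feedback on two sentences: one clear case, one contested case.
print(soft_label(["biased", "biased", "biased", "biased"]))          # 1.0
print(soft_label(["biased", "not biased", "biased", "not biased"]))  # 0.5
```

A classifier trained on such values could treat contested sentences differently from clear ones instead of forcing every sentence into one of two classes.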

Making the system and process transparent is critical to avoid misuse. Given the potential impact of skewed or misclassified bias highlights on reader perceptions, the system must communicate the impossibility of achieving absolute accuracy. Hence, the landing page informs readers about the possible inaccuracy to impart a clear understanding of classification limitations and ask for readers’ help. We believe that even with the classification improvements of large language models such as GPT, assessing human perception of bias will always be crucial, and feedback mechanisms to assess such perception are becoming more critical for developing and constantly evaluating fair AI.

Limitations

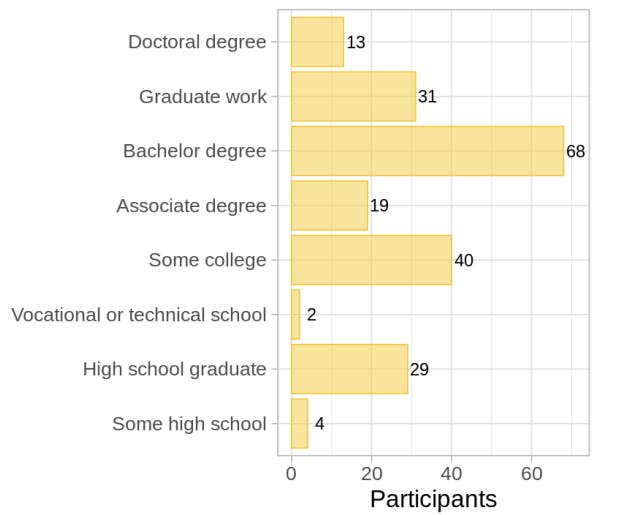

Our team’s Western education might add bias. Similarly, the Prolific study and the recruitment for NewsUnfold via LinkedIn might skew results due to the presence of more academic participants. The age range and education (Figure 8) of participants in Section 3 suggest a bias towards the digitally accustomed and educated, in addition to a left slant (Figure 12). Although both studies involve relatively small samples, the results are nevertheless significant. Future research should examine larger and more diverse samples to evaluate how varying backgrounds and political orientations influence feedback behavior and quality. We implemented the feedback mechanism on the NewsUnfold platform to test if readers would give feedback in an environment as close as possible to a real news aggregator. Hence, we decided against a demographic survey to collect annotator data as it might negatively impact readers’ experience. The more open study setting (compare Spinde et al. 2021c,d), with users exploring NewsUnfold freely, complicates the identification of factors affecting data quality. While the goal is to gather data from diverse readers, NewsUnfold currently controls for geographical diversity. Unlike the US pre-study, NewsUnfold had significant participation from Japan and Germany. However, readers’ backgrounds and quality control tasks must be implemented in later iterations, for example, by implementing user accounts. Their data can be used to improve models (Law and von Ahn 2011) and fair classifiers (Cabitza, Campagner, and Basile 2023) accounting for backgrounds and protecting minorities or underprivileged groups.

We did not collect demographic data or ask participants which device they used to view NewsUnfold in the UX study. Future studies need to control for situations, experiences, attention, and perceptions of bias, which could diverge depending on personal backgrounds and the device used.

Future Work

We plan to develop NewsUnfold into a standalone website with constantly updated content.

Simultaneously, we aim to evaluate different feedback mechanisms for media bias classifiers and to extend our design’s application beyond NewsUnfold. We plan to implement and test the feedback tool (2 in Figure 4) as a browser plugin[18] and social media integration. The value of the feedback mechanism lies in its adaptability across different platforms, using visual cues to enhance datasets for various bias types. Social media websites can add similar mechanisms as extensions highlighting biased language to make users more aware of potential biases and their influence (Spinde et al. 2022). Our next phase involves testing its integration in social media environments like X.

We will monitor the impact on user behavior in the long term and explore gamification and designs to increase engagement (Wiethof, Roocks, and Bittner 2022). Also, we will assess varied bias indicators, such as credibility cues (Bhuiyan et al. 2021), which have been shown to be effective in similar studies (Yaqub et al. 2020; Kenning, Kelly, and Jones 2018) but need real-world validation. We further plan to add labels for subtypes of bias (Spinde et al. 2024). Advanced models like LLaMA (Touvron et al. 2023), BLOOM (Workshop et al. 2023), and GPT-4 (OpenAI 2023) may offer additional explanations on bias highlights (1 in Figure 4). Improvements in both models and data collection will facilitate finding and comparing different outlets’ coverage of topics and views of the general population. Simultaneously, controlling for personal backgrounds (Groeling 2013) could assist journalists and researchers in studying and understanding media bias, as well as its formation and expression over time.

7 Conclusion

We present NewsUnfold, a HITL news-reading application that visually highlights media bias for data collection. It augments an existing dataset via a previously evaluated feedback mechanism, improving classifier performance and surpassing the baseline IAA while integrating a UX study. NewsUnfold showcases the potential for diverse data collection in evolving linguistic contexts while considering human factors.

8 Acknowledgments

This work was supported by the Hanns-Seidel Foundation (https://www.hss.de/), the German Academic Exchange Service (DAAD) (https://www.daad.de/de/), the Bavarian State Ministry for Digital Affairs in the project XR Hub (Grant A5-3822-2-16), and partially supported by JST CREST Grant JPMJCR20D3 Japan. None of the funders played any role in the study design or publication-related decisions. ChatGPT was used for proofreading.

References

An, J.; Cha, M.; Gummadi, K.; Crowcroft, J.; and Quercia, D. 2021. Visualizing Media Bias through Twitter. Proceedings of the International AAAI Conference on Web and Social Media, 6(2): 2–5.

Ardevol-Abreu, A.; and Zuniga, H. G. 2017. Effects of Editorial Media Bias Perception and Media Trust on the Use of Traditional, Citizen, and Social Media News. Journalism & Mass Communication Quarterly, 94(3): 703–724.

Baumer, E. P. S.; Polletta, F.; Pierski, N.; and Gay, G. K. 2015. A Simple Intervention to Reduce Framing Effects in Perceptions of Global Climate Change. Environmental Communication, 11(3): 289–310.

Bhuiyan, M. M.; Horning, M.; Lee, S. W.; and Mitra, T. 2021. NudgeCred: Supporting news credibility assessment on social media through nudges. Proceedings of the ACM on Human-Computer Interaction, 5(CSCW2): 1–30.

Brew, A.; Greene, D.; and Cunningham, P. 2010. Using Crowdsourcing and Active Learning to Track Sentiment in Online Media. In Proceedings of the 2010 Conference on ECAI 2010: 19th European Conference on Artificial Intelligence, 145–150. NLD: IOS Press. ISBN 9781607506058.

Cabitza, F.; Campagner, A.; and Basile, V. 2023. Toward a Perspectivist Turn in Ground Truthing for Predictive Computing. Proceedings of the AAAI Conference on Artificial Intelligence, 37(6): 6860–6868.

Cooper, S.; Khatib, F.; Treuille, A.; Barbero, J.; Lee, J.; Beenen, M.; Leaver-Fay, A.; Baker, D.; Popović, Z.; and Players, F. 2010. Predicting protein structures with a multiplayer online game. Nature, 466(7307): 756–760.

Demartini, G.; Mizzaro, S.; and Spina, D. 2020. Human-in-the-loop Artificial Intelligence for Fighting Online Misinformation: Challenges and Opportunities. IEEE Data Eng. Bull., 43: 65–74.

Draws, T.; Rieger, A.; Inel, O.; Gadiraju, U.; and Tintarev, N. 2021. A Checklist to Combat Cognitive Biases in Crowdsourcing. Proceedings of the AAAI Conference on Human Computation and Crowdsourcing, 9: 48–59.

Druckman, J. N.; and Parkin, M. 2005. The Impact of Media Bias: How Editorial Slant Affects Voters. The Journal of Politics, 67(4): 1030–1049.

Eberl, J.-M.; Boomgaarden, H. G.; and Wagner, M. 2017. One Bias Fits All? Three Types of Media Bias and Their Effects on Party Preferences. Communication Research, 44(8): 1125–1148.

Eveland Jr., W. P.; and Shah, D. V. 2003. The Impact of Individual and Interpersonal Factors on Perceived News Media Bias. Political Psychology, 24(1): 101–117.

Feldman, L. 2011. Partisan Differences in Opinionated News Perceptions: A Test of the Hostile Media Effect. Political Behavior, 33(3): 407–432.

Fornaciari, T.; Uma, A.; Paun, S.; Plank, B.; Hovy, D.; and Poesio, M. 2021. Beyond Black & White: Leveraging Annotator Disagreement via Soft-Label Multi-Task Learning. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, 2591–2597. Online: Association for Computational Linguistics.

Furnham, A.; and Boo, H. C. 2011. A literature review of the anchoring effect. The Journal of Socio-Economics, 40(1): 35–42.

Goyal, S.; Doddapaneni, S.; Khapra, M. M.; and Ravindran, B. 2023. A Survey of Adversarial Defenses and Robustness in NLP. ACM Computing Surveys, 55(14s): 332:1–332:39.

Groeling, T. 2013. Media Bias by the Numbers: Challenges and Opportunities in the Empirical Study of Partisan News. Annual Review of Political Science, 16(1): 129–151.

Hayes, A. F.; and Krippendorff, K. 2007. Answering the Call for a Standard Reliability Measure for Coding Data. Communication Methods and Measures, 1(1): 77–89.

He, Z.; Majumder, B. P.; and McAuley, J. 2021. Detect and Perturb: Neutral Rewriting of Biased and Sensitive Text via Gradient-based Decoding. In Findings of the Association for Computational Linguistics: EMNLP 2021, 4173–4181. Association for Computational Linguistics.

Hube, C.; and Fetahu, B. 2019. Neural Based Statement Classification for Biased Language. In Proceedings of the Twelfth ACM International Conference on Web Search and Data Mining, WSDM ’19, 195–203. Association for Computing Machinery. ISBN 978-1-4503-5940-5.

Jakesch, M.; Bhat, A.; Buschek, D.; Zalmanson, L.; and Naaman, M. 2023. Co-Writing with Opinionated Language Models Affects Users’ Views. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, CHI ’23. New York, NY, USA: Association for Computing Machinery. ISBN 9781450394215.

Karmakharm, T.; Aletras, N.; and Bontcheva, K. 2019. Journalist-in-the-Loop: Continuous Learning as a Service for Rumour Analysis. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP): System Demonstrations, 115–120. Hong Kong, China: Association for Computational Linguistics.

Kause, A.; Townsend, T.; and Gaissmaier, W. 2019. Framing Climate Uncertainty: Frame Choices Reveal and Influence Climate Change Beliefs. Weather, Climate, and Society, 11(1): 199–215.

Kenning, M. P.; Kelly, R.; and Jones, S. L. 2018. Supporting Credibility Assessment of News in Social Media Using Star Ratings and Alternate Sources. In Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems, 1–6. ACM. ISBN 978-1-4503-5621-3.

Law, E.; and von Ahn, L. 2011. Human computation. Springer International Publishing. ISBN 978-3-031-01555-7.

Lee, N.; Bang, Y.; Yu, T.; Madotto, A.; and Fung, P. 2022. NeuS: Neutral Multi-News Summarization for Mitigating Framing Bias. arxiv.

Liu, R.; Wang, L.; Jia, C.; and Vosoughi, S. 2021. Political Depolarization of News Articles Using Attribute-aware Word Embeddings. arxiv.

Mavridis, P.; Jong, M. d.; Aroyo, L.; Bozzon, A.; Vos, J. d.; Oomen, J.; Dimitrova, A.; and Badenoch, A. 2018. A human in the loop approach to capture bias and support media scientists in news video analysis. In Proceedings of the 1st workshop on subjectivity, ambiguity and disagreement in crowdsourcing, and short paper proceedings of the 1st workshop on disentangling the relation between crowdsourcing and bias management, volume 2276 of CEUR workshop proceedings, 88–92. CEUR-WS.

Mosqueira-Rey, E.; Hernandez-Pereira, E.; Alonso-Ríos, D.; Bobes-Bascaran, J.; and Fernandez-Leal, A. 2022. Human-in-the-Loop Machine Learning: A State of the Art. Artificial Intelligence Review.

OpenAI. 2023. GPT-4 Technical Report. arxiv.

Otterbacher, J. 2015. Crowdsourcing Stereotypes: Linguistic Bias in Metadata Generated via GWAP. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, CHI ’15, 1955–1964. New York, NY, USA: Association for Computing Machinery. ISBN 978-1-4503-3145-6.

Park, S.; Kang, S.; Chung, S.; and Song, J. 2009. NewsCube: Delivering Multiple Aspects of News to Mitigate Media Bias. Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, 443–452.

Pennycook, G.; and Rand, D. G. 2022. Accuracy prompts are a replicable and generalizable approach for reducing the spread of misinformation. Nature Communications, 13(1): 2333.

Powers, D. M. W. 2008. Evaluation: From Precision, Recall and F-Factor to ROC, Informedness, Markedness & Correlation. Mach. Learn. Technol., 2.

Pryzant, R.; Diehl Martinez, R.; Dass, N.; Kurohashi, S.; Jurafsky, D.; and Yang, D. 2020. Automatically Neutralizing Subjective Bias in Text. Proceedings of the AAAI Conference on Artificial Intelligence, 34(01): 480–489.

Raykar, V. C.; and Yu, S. 2011. Ranking Annotators for Crowdsourced Labeling Tasks. In Proceedings of the 24th International Conference on Neural Information Processing Systems, NIPS’11, 1809–1817. Red Hook, NY, USA: Cur-

Raza, S.; Reji, D. J.; and Ding, C. 2022. Dbias: detecting biases and ensuring fairness in news articles. International Journal of Data Science and Analytics.

Recasens, M.; Danescu-Niculescu-Mizil, C.; and Jurafsky, D. 2013. Linguistic models for analyzing and detecting biased language. In Proceedings of the 51st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), 1650–1659.

Ribeiro, F. N.; Henrique, L.; Benevenuto, F.; Chakraborty, A.; Kulshrestha, J.; Babaei, M.; and Gummadi, K. P. 2018. Media Bias Monitor: Quantifying Biases of Social Media News Outlets at Large-Scale. In Twelfth International AAAI Conference on Web and Social Media, 290–299. AAAI Press. ISBN 978-1-57735-798-8.

Shaw, A. D.; Horton, J. J.; and Chen, D. L. 2011. Designing Incentives for Inexpert Human Raters. In Proceedings of the ACM 2011 Conference on Computer Supported Cooperative Work, CSCW ’11, 275–284. New York, NY, USA: Association for Computing Machinery. ISBN 9781450305563.

Sheng, V. S.; and Zhang, J. 2019. Machine Learning with Crowdsourcing: A Brief Summary of the Past Research and Future Directions. Proceedings of the AAAI Conference on Artificial Intelligence, 33(01): 9837–9843.

Soliman, W.; and Tuunainen, V. K. 2015. Understanding Continued Use of Crowdsourcing Systems: An Interpretive Study. Journal of Theoretical and Applied Electronic Commerce Research, 10(1): 1–18.

Spinde, T.; Hamborg, F.; Donnay, K.; Becerra, A.; and Gipp, B. 2020. Enabling News Consumers to View and Understand Biased News Coverage: A Study on the Perception and Visualization of Media Bias. In Proceedings of the ACM/IEEE Joint Conference on Digital Libraries in 2020, JCDL ’20, 389–392. Association for Computing Machinery. ISBN 978-1-4503-7585-6.

Spinde, T.; Hamborg, F.; and Gipp, B. 2020. An Integrated Approach to Detect Media Bias in German News Articles. In Proceedings of the ACM/IEEE Joint Conference on Digital Libraries in 2020, JCDL ’20, 505–506. Association for Computing Machinery. ISBN 978-1-4503-7585-6.

Spinde, T.; Hinterreiter, S.; Haak, F.; Ruas, T.; Giese, H.; Meuschke, N.; and Gipp, B. 2024. The Media Bias Taxonomy: A Systematic Literature Review on the Forms and Automated Detection of Media Bias. arXiv:2312.16148.

Spinde, T.; Jeggle, C.; Haupt, M.; Gaissmaier, W.; and Giese, H. 2022. How Do We Raise Media Bias Awareness Effectively? Effects of Visualizations to Communicate Bias. PLOS ONE, 17(4): 1–14.

Spinde, T.; Kreuter, C.; Gaissmaier, W.; Hamborg, F.; Gipp, B.; and Giese, H. 2021a. Do You Think It’s Biased? How To Ask For The Perception Of Media Bias. In Proceedings of the ACM/IEEE Joint Conference on Digital Libraries (JCDL).

Spinde, T.; Plank, M.; Krieger, J.-D.; Ruas, T.; Gipp, B.; and Aizawa, A. 2021b. Neural Media Bias Detection Using Distant Supervision With BABE – Bias Annotations By Experts. In Findings of the Association for Computational Linguistics: EMNLP 2021. Dominican Republic.

Spinde, T.; Richter, E.; Wessel, M.; Kulshrestha, J.; and Donnay, K. 2023. What do Twitter comments tell about news article bias? Assessing the impact of news article bias on its perception on Twitter. Online Social Networks and Media, 37-38: 100264.

Spinde, T.; Rudnitckaia, L.; Kanishka, S.; Hamborg, F.; Gipp, B.; and Donnay, K. 2021c. MBIC – A Media Bias Annotation Dataset Including Annotator Characteristics. Proceedings of the iConference 2021.

Spinde, T.; Sinha, K.; Meuschke, N.; and Gipp, B. 2021d. TASSY – A Text Annotation Survey System. In Proceedings of the ACM/IEEE Joint Conference on Digital Libraries (JCDL).

Stumpf, S.; Rajaram, V.; Li, L.; Burnett, M.; Dietterich, T.; Sullivan, E.; Drummond, R.; and Herlocker, J. 2007. Toward Harnessing User Feedback for Machine Learning. In Proceedings of the 12th International Conference on Intelligent User Interfaces, IUI ’07, 82–91. New York, NY, USA: Association for Computing Machinery. ISBN 1595934812.

Touvron, H.; Lavril, T.; Izacard, G.; Martinet, X.; Lachaux, M.-A.; Lacroix, T.; Rozière, B.; Goyal, N.; Hambro, E.; Azhar, F.; Rodriguez, A.; Joulin, A.; Grave, E.; and Lample,

Vaccaro, M.; and Waldo, J. 2019. The effects of mixing machine learning and human judgment. Communications of the ACM, 62(11): 104–110.

Vraga, E. K.; and Tully, M. 2015. Media Literacy Messages and Hostile Media Perceptions: Processing of Nonpartisan versus Partisan Political Information. Mass Communication and Society, 18(4): 422–448.

Wallace, E.; Rodriguez, P.; Feng, S.; Yamada, I.; and Boyd-Graber, J. 2019. Trick Me If You Can: Human-in-the-Loop Generation of Adversarial Examples for Question Answering. Transactions of the Association for Computational Linguistics, 7: 387–401.

Weil, A. M.; and Wolfe, C. R. 2022. Individual differences in risk perception and misperception of COVID-19 in the context of political ideology. Applied cognitive psychology, 36(1): 19–31.

Wessel, M.; Horych, T.; Ruas, T.; Aizawa, A.; Gipp, B.; and Spinde, T. 2023. Introducing MBIB – the First Media Bias Identification Benchmark Task and Dataset Collection. In Proceedings of 46th International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR 23). ACM.

Wiethof, C.; Roocks, T.; and Bittner, E. A. C. 2022. Gamifying the Human-in-the-Loop: Toward Increased Motivation for Training AI in Customer Service. In Artificial Intelligence in HCI, 100–117. Cham: Springer International Publishing. ISBN 978-3-031-05643-7.

Workshop, B.; Scao, T. L.; Fan, A.; Akiki, C.; Pavlick, E.; and Ilić, S. 2023. BLOOM: A 176B-Parameter Open-Access Multilingual Language Model. arxiv.

Xintong, G.; Hongzhi, W.; Song, Y.; and Hong, G. 2014. Brief survey of crowdsourcing for data mining. Expert Systems with Applications, 41(17): 7987–7994.

Xu, J.; and Diab, M. 2024. A Note on Bias to Complete.

Yaqub, W.; Kakhidze, O.; Brockman, M. L.; Memon, N.; and Patil, S. 2020. Effects of Credibility Indicators on Social Media News Sharing Intent. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, 1–14. ACM. ISBN 978-1-4503-6708-0.

Zeng, Z.; Tang, J.; and Wang, T. 2017. Motivation mechanism of gamification in crowdsourcing projects. International Journal of Crowd Science, 1(1): 71–82.

:::info

Authors:

(1) Smi Hinterreiter;

(2) Martin Wessel;

(3) Fabian Schliski;

(4) Isao Echizen;

(5) Marc Erich Latoschik;

(6) Timo Spinde.

:::

:::info

This paper is available on arxiv under CC0 1.0 license.

:::

[17] Students primarily annotated the data.

[18] A future experiment will determine if a plugin ensures quality annotations. However, its platform integration expands reach and diversity, making it our preferred choice.