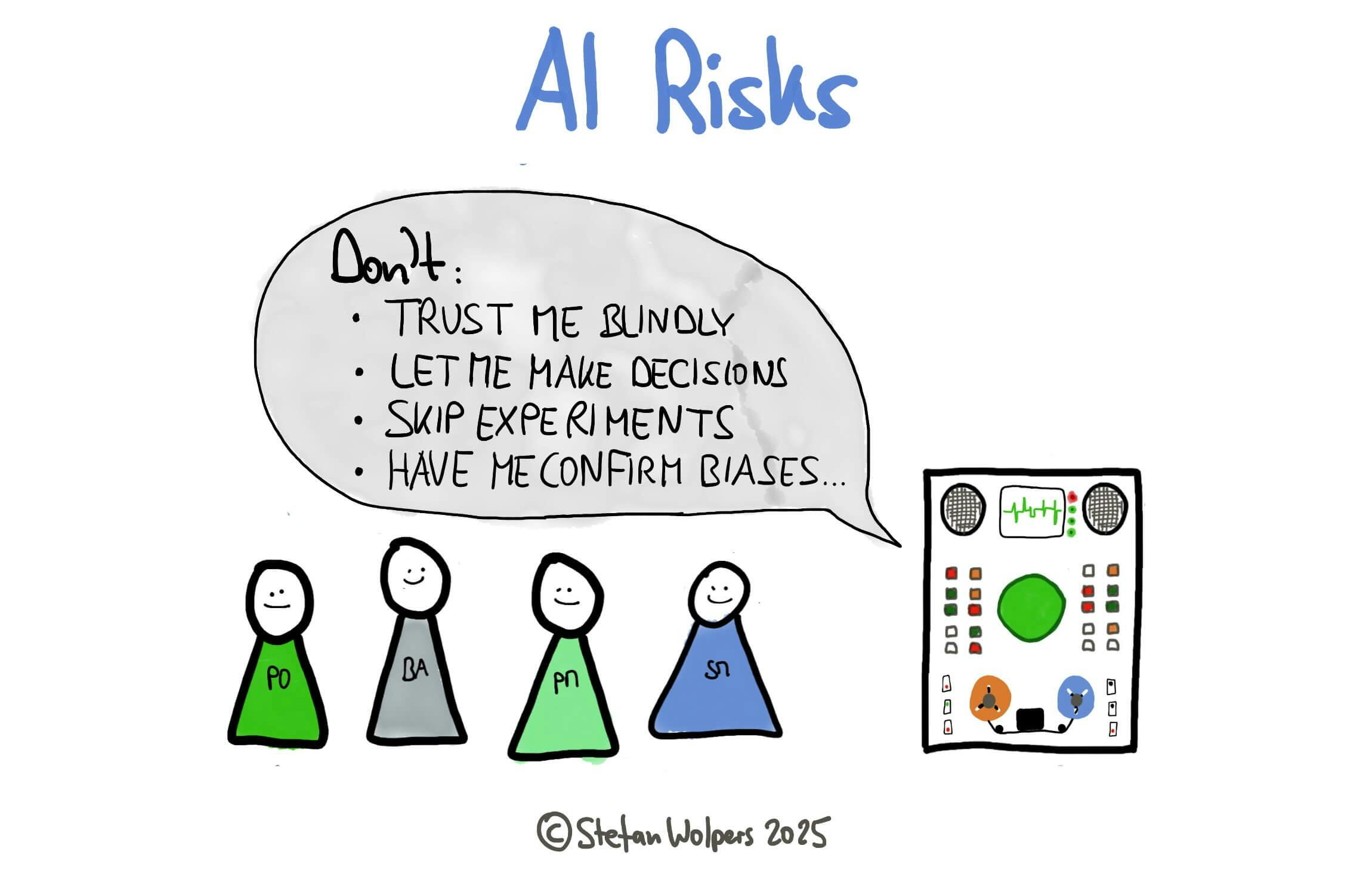

TL;DR: AI Risks: It’s a Trap!

AI is tremendously helpful in the hands of a skilled operator. It can accelerate research, generate insights, and support better decision-making. But here’s what the AI evangelists won’t tell you: it can be equally damaging when fundamental AI risks are ignored.

The main risk is a gradual transfer of product strategy from business leaders to technical systems, often without anyone deciding this should happen. Teams add “AI” and often report more output, not more learning. That pattern is consistent with long-standing human-factors findings: under time pressure, people over-trust automated cues and under-practice independent verification, which proves especially dangerous when the automation is probabilistic rather than deterministic (Parasuraman & Riley, 1997; see all sources listed below). This is not primarily a model failure; it is a system and decision-making failure that AI accelerates.

This article extends the lessons on “AI Risks” from the Agile 4 Agile Online course. Gemini 2.5 Pro supported the source research.

Three Mechanisms Destroying Product Judgment

Understanding AI risks requires examining the underlying mechanisms that cause these problems, not just cataloging the symptoms.

Mechanism One: Over-Trust → Erosion of Empiricism

Under deadline pressure, probabilistic outputs get treated as facts. Parasuraman and Riley’s established taxonomy—use, misuse, disuse, abuse—predicts this slide from active sense-making to passive acceptance. In product work, this manifests as Product Owners and Product Managers accepting unclear AI recommendations without question, while losing track of how decisions were made and whether they can be verified.

Automation bias represents the most dangerous form of this over-trust. Research consistently shows that people over-rely on automated systems while under-monitoring their performance—a pattern observed across domains from aviation to medical diagnosis (Parasuraman & Manzey, 2010). Teams risk overweighting AI-generated recommendations even when they contradict on-the-ground insights, fundamentally undermining empirical process control.

The corrective is to force hypotheses back into the process. Every AI-influenced decision should yield a three-part artifact: claim, test, and pre-decided action. This applies hypothesis-driven development to everyday product work, maintaining speed while preserving learning.

Mechanism Two: Optimization Power → Metric Gaming (Goodhart Effects)

When a proxy becomes a target, it ceases to be an effective proxy; optimization amplifies the harm. This is the substance of Goodhart effects in AI settings and the specification-gaming literature, which documents systems that maximize the written objective while undermining the intended outcome (Manheim & Garrabrant, 2018).

DeepMind’s specification gaming research reveals how AI systems find unexpected ways to achieve high scores while completely subverting the intended goal. In product contexts, that is “green dashboards, red customers”: click-through rises while trust or retention falls.

Value validation shortcuts exemplify this mechanism. Teams risk accepting AI-generated value hypotheses without proper experimentation. The AI’s prediction of value, a mere proxy at best, gets treated as value itself, creating perfectly optimized metrics dangerously detached from real-world success.

Mechanism Three: Distorted Feedback → Convergence and Homogenization

AI-mediated “insights” are persuasive and can crowd out direct customer contact, creating self-reinforcing loops that drift away from reality. Research shows the problem is real: AI systems that learn from their own outputs make bias worse (Ensign et al., 2018), and recommendation systems make everything more similar without actually being more useful (Chaney et al., 2018).
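The self-reinforcing loop can be illustrated with a deliberately tiny toy model. It is not the model used by Ensign et al. or Chaney et al.; the function name, the greedy rule, and the appeal values are all assumptions chosen for illustration. The system always shows the item with the most recorded clicks, and it only records clicks for what it shows.

```python
def simulate_greedy_exposure(true_appeal, rounds, prior_clicks=1.0):
    """Toy loop: always show the item with the most recorded clicks so far.

    true_appeal[i] is the expected number of clicks per showing of item i
    (hypothetical values). The system only gathers data about what it shows,
    so it never learns anything new about the items it ignores.
    """
    clicks = [prior_clicks] * len(true_appeal)
    shown_count = [0] * len(true_appeal)
    for _ in range(rounds):
        # Ties go to the first item, which is all it takes to start the loop.
        shown = max(range(len(clicks)), key=lambda i: clicks[i])
        shown_count[shown] += 1
        clicks[shown] += true_appeal[shown]  # expected value, no randomness
    return shown_count


print(simulate_greedy_exposure([0.5, 0.5], rounds=100))  # two equally good items
print(simulate_greedy_exposure([0.3, 0.6], rounds=100))  # the better item is second
```

Both calls return `[100, 0]`: the first item receives all exposure, even in the second case where the other item is twice as appealing. The data the system collects confirms its own earlier choice, which is the pattern the paragraph above describes.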

Product vision erosion occurs through this mechanism. AI excels at local optimization but struggles with breakthrough thinking. When teams rely heavily on AI for strategic direction, they risk optimizing for historical patterns while missing novel opportunities. The system, trained on its own outputs and user reactions, gradually filters out the diverse, outlier perspectives that spark true innovation.

Customer understanding degradation follows the same pattern. AI personas risk becoming more real to teams than actual customers. Product decisions risk getting filtered through algorithmic interpretation rather than direct engagement, severing the essential human connection that separates great products from technically competent failures.

Systemic Causes: Why Smart Organizations Enable These Failures

These mechanisms recur because incentives reward announcements over outcomes and speed over learning, while many teams lack uncertainty literacy, that is, the ability to distinguish correlation from causation and maintain falsifiability. Governance completes the trap: risk processes assume determinism, but AI is probabilistic.

Organizational factors create systematic blindness. Pressure for “data-driven” decisions without empirical validation becomes an organizational fetish (Brynjolfsson & McElheran, 2016). Companies risk worshipping the appearance of objectivity while abandoning actual evidence-based practice.

Cultural factors compound the problem. Technology worship, the belief that algorithms provide objective, unbiased solutions, replaces critical thinking. Anti-empirical patterns emerge where teams prefer algorithmic authority over evidence-based validation.

The NIST AI Risk Management Framework is explicit: trustworthy AI requires context-specific risk identification, documentation of assumptions, and continuous monitoring, none of which appears by accident (NIST AI RMF, 2023). These expectations must be made operational.

AI Risks And The Product Catastrophe: When Mechanisms Converge

When these risks converge in product contexts, the results can be predictable and devastating. Teams risk losing feedback loops with actual customers, replacing direct engagement with AI-mediated insights. Features risk getting optimized for historical metrics rather than future value creation. Roadmaps risk becoming exercises in pattern recognition rather than strategic vision.

Most dangerously, product decisions risk becoming technocratic rather than customer-centric. Research on algorithmic decision-making suggests that teams with technical AI literacy can gain disproportionate influence over strategic decisions (Kellogg et al., 2020). Data scientists and engineers may begin making product decisions, while Product Owners and Product Managers become AI operators, a dynamic observed in other domains where algorithmic tools shift control from domain experts to technical operators.

The most dangerous scenario occurs when multiple risks combine: command-and-control organizational structures, technology worship, and competitive pressure together create an environment where questioning AI becomes career-limiting. In this context, product strategy risks being transferred from business leaders to technical systems without anyone explicitly deciding this should happen.

Evidence-Based Response to Mitigate AI Risks: Converting Suggestion into Learning

A credible response must be operational, not theatrical. Treat AI as a talented junior analyst—fast, tireless, occasionally wrong in dangerous ways—and wrap it with empiricism, challenge, reversibility, and transparency.

Empiricism by Default

Use the decision triplet (claim, test, action) on any AI-influenced decision; attach it to any product suggestion so you can inspect and adapt with evidence. This keeps speed while preserving learning.

- The Claim: What is the specific, falsifiable claim the model is making?

- The Test: How can we validate this with a cheap, fast experiment?

- The Action: What will we do if the test passes or fails?

This approach applies hypothesis-driven development principles to everyday product work, ensuring that AI recommendations become testable propositions rather than accepted truths.
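One way to make the triplet hard to skip is to give it a fixed shape in whatever tool the team already uses. The following is a minimal sketch in Python, not a prescribed format: the class name, field names, and the example decision are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DecisionTriplet:
    """One AI-influenced decision, written down before the test runs."""
    claim: str            # specific, falsifiable statement taken from the AI output
    test: str             # cheap, fast experiment that could prove the claim wrong
    action_if_pass: str   # decided in advance
    action_if_fail: str   # decided in advance
    passed: Optional[bool] = None  # stays None until the test has been run

    def next_action(self) -> str:
        """Return the pre-decided action, or a reminder that the test is open."""
        if self.passed is None:
            return f"Run the test first: {self.test}"
        return self.action_if_pass if self.passed else self.action_if_fail


# Hypothetical example decision.
triplet = DecisionTriplet(
    claim="An annual-plan discount raises trial-to-paid conversion by 2 points",
    test="Two-week A/B test on 10% of new trials",
    action_if_pass="Roll out to all new trials",
    action_if_fail="Drop the discount and interview churned trial users",
)
print(triplet.next_action())  # the test has not run yet
triplet.passed = False
print(triplet.next_action())  # the action chosen before the result was known
```

The design choice that matters is that both actions are required fields: a suggestion cannot be recorded without stating what the team will do if it turns out to be wrong.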

Lightweight Challenge

Rotate a 15-minute “red team” to interrogate high-impact recommendations, probing alternative hypotheses, data lineage, and failure modes. Structured doubt measurably reduces automation misuse when paired with a standing Goodhart check: “Which proxy might be harming the mission?”
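The Goodhart check can be partly mechanized as a prompt for the red-team conversation. The sketch below assumes each optimized proxy is paired with the mission metric it is supposed to stand for, and that higher is better for both; the names, numbers, and tolerance are hypothetical. It flags pairs for discussion and does not prove harm.

```python
from dataclasses import dataclass


@dataclass
class MetricPair:
    proxy_name: str        # the number being optimized, e.g. click-through rate
    proxy_change: float    # relative change over the review period (0.08 = +8%)
    mission_name: str      # the outcome the proxy is supposed to stand for
    mission_change: float  # relative change over the same period


def goodhart_flags(pairs, tolerance=0.0):
    """Return pairs where the proxy improved while the mission metric declined."""
    return [
        p for p in pairs
        if p.proxy_change > tolerance and p.mission_change < -tolerance
    ]


# Hypothetical review data.
pairs = [
    MetricPair("click-through rate", 0.08, "90-day retention", -0.03),
    MetricPair("sessions per user", 0.02, "tasks completed", 0.01),
]
for p in goodhart_flags(pairs):
    print(f"Check: {p.proxy_name} is up while {p.mission_name} is down")
```

With the sample data, only the click-through and retention pair is flagged, which is the “green dashboards, red customers” pattern described earlier.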

Repair the Feedback Loop

Mandate weekly direct user conversations for Product Managers, POs, and designers; AI may summarize but must not substitute. This counters known feedback-loop pathologies and recommender homogenization, maintaining the direct customer connection essential for breakthrough insights.

Reversibility and Resilience

Favor architectures and release practices that make change safe to try and easy to undo: canary releases and rollbacks; error-budget-driven change control; and reversible (two-way-door) decisions. For deeper vendor entanglements, use evolutionary architecture with fitness functions and the Strangler Fig pattern to keep an explicit “exit in 30 days” path.

These practices draw from Site Reliability Engineering principles where failure is assumed inevitable and systems optimize for rapid recovery rather than perfect prevention.
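To show how an error budget and a canary comparison can turn into a pre-agreed release decision, here is a minimal sketch. The function names and every threshold (the 10% relative increase, the 25% budget floor) are assumptions for illustration, not values taken from the Google SRE Workbook.

```python
def error_budget_remaining(slo_target, total_requests, failed_requests):
    """Fraction of the error budget still unspent (negative means overspent)."""
    if not 0 < slo_target < 1:
        raise ValueError("slo_target must be between 0 and 1, e.g. 0.999")
    allowed_failures = (1.0 - slo_target) * total_requests
    return 1.0 - failed_requests / allowed_failures


def canary_decision(baseline_error_rate, canary_error_rate, budget_remaining,
                    max_relative_increase=0.10):
    """Return 'promote', 'hold', or 'rollback' for a canary release."""
    if budget_remaining <= 0:
        return "rollback"  # budget spent: stop shipping change, restore stability
    if canary_error_rate > baseline_error_rate * (1 + max_relative_increase):
        return "rollback"  # canary is clearly worse than the current version
    if budget_remaining < 0.25:
        return "hold"      # little budget left: keep the canary small for now
    return "promote"


# A 99.9% SLO over 1,000,000 requests allows about 1,000 failures.
budget = error_budget_remaining(0.999, total_requests=1_000_000, failed_requests=400)
print(round(budget, 2))                       # about 0.6 of the budget is left
print(canary_decision(0.010, 0.012, budget))  # canary error rate is 20% higher
```

In the example, the canary is rolled back even though budget remains, because its error rate exceeds the baseline by more than the agreed margin. The point is that the undo rule is decided before the release, not argued about afterwards.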

Transparency That Is Cheap and Default

Log prompts, model identifiers, input sources, and one-sentence rationales for consequential decisions. This enables debugging drift and explaining choices when reality disagrees with predictions. It also aligns with NIST guidance on documentation and continuous monitoring.
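A log like this can be as simple as one JSON line per decision. The sketch below assumes a local append-only file is acceptable; the field names, the model identifier, and the example values are hypothetical, and a team may prefer to store the same fields in its existing ticketing or analytics tool.

```python
import json
from dataclasses import dataclass, asdict, field
from datetime import datetime, timezone


@dataclass
class DecisionLogEntry:
    decision: str        # what was decided
    prompt: str          # the prompt that produced the AI suggestion
    model_id: str        # which model and version answered
    input_sources: list  # where the data behind the prompt came from
    rationale: str       # one sentence on why the suggestion was accepted
    logged_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def to_json_line(entry):
    """Serialize one entry as a single JSON line."""
    return json.dumps(asdict(entry), ensure_ascii=False)


def append_entry(path, entry):
    """Append one entry to a JSON Lines file so the log is easy to grep and diff."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(to_json_line(entry) + "\n")


# Hypothetical example entry.
entry = DecisionLogEntry(
    decision="Prioritize onboarding checklist over dark mode",
    prompt="Rank these five backlog items by expected retention impact.",
    model_id="example-model-v1",
    input_sources=["Q3 churn survey", "support ticket export"],
    rationale="Matches what four of six interviewed customers said last week.",
)
print(to_json_line(entry))
```

Calling `append_entry("decisions.jsonl", entry)` would add the record to a file; the file name is only an example.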

Conclusion: Raising the Bar on Product Management

AI amplifies what your operating system already is. In a strong system (empirical, curious, transparent), it compounds learning; in a weak one, it accelerates confident error. The choice is not about faith in models, but whether you can convert probabilistic suggestions into reliable, auditable decisions without losing your product’s core.

The organizations that figure this out will build products that actually matter. Those that fail this test will optimize perfectly for irrelevance, creating systems that excel at metrics while failing to meet customer needs.

Keep ownership human. Keep loops short. Keep proof higher than confidence. Ship learning, not an irrational belief in technology.

Sources Relevant to AI Risks

- Amazon (2015). Shareholder letter (Type-1/Type-2 decisions)

- Brynjolfsson, E., & McElheran, K. (2016). The rapid adoption of data-driven decision-making. American Economic Review, 106(5), 133-139.

- Chaney, A.J.B., Stewart, B.M., & Engelhardt, B.E. (2018). How Algorithmic Confounding in Recommendation Systems Increases Homogeneity and Decreases Utility. RecSys ’18.

- DeepMind. Specification gaming: the flip side of AI ingenuity

- DeepMind (2022). Goal misgeneralisation

- Ensign, D., Friedler, S.A., Neville, S., Scheidegger, C., & Venkatasubramanian, S. (2018). Runaway Feedback Loops in Predictive Policing. PMLR.

- Fowler, M. Strangler Fig

- Google SRE Workbook. Canarying releases

- Google SRE Workbook. Error budget policy

- Kellogg, K. C., Valentine, M. A., & Christin, A. (2020). Algorithms at work: The new contested terrain of control. Academy of Management Annals, 14(1), 366-410.

- Lum, K., & Isaac, W. (2016). To predict and serve? Significance.

- Manheim, D., & Garrabrant, S. (2018). Categorizing Variants of Goodhart’s Law. arXiv:1803.04585.

- NIST (2023). AI Risk Management Framework (AI RMF 1.0)

- O’Reilly, B. (2013). How to Implement Hypothesis-Driven Development

- Parasuraman, R., & Manzey, D. H. (2010). Complacency and bias in human use of automation: An attentional integration. Human Factors, 52(3), 381-410.

- Parasuraman, R., & Riley, V. (1997). Humans and Automation: Use, Misuse, Disuse, Abuse. Human Factors.

- Thoughtworks (2014). How to Implement Hypothesis-Driven Development

- Thoughtworks Tech Radar. Architectural fitness functions.